Release Notes


Q3'24 Release Note

NEW FEATURES

- Game controller / Joystick user input: You now have the option of incorporating game controllers and joysticks into your study! Participants can move objects and interact with the experiment as specified by your design via the Gamepad/Joystick Trigger, and you can record data, e.g. which key they pressed and more, using the Gamepad/Joystick trigger-specific values. Also check out this sample study walkthrough of how it can be implemented!

- Participants Tab: With Labvanced, you can manage and communicate with your participants via the newly added ‘Participants’ tab. Using the ‘Participants’ tab, you can get an overview of your subjects for a particular study, customize the emails that are sent during the course of the published study, and connect your email address using the SMTP Server. This is especially useful for longitudinal research with multiple sessions.

- Multi User Study Features: Multi user studies require a lot of data to be communicated between participants in real time. In very large studies, where hundreds of participants can access a study at once, this can place a big burden on servers which can impact study integrity. For this reason, we have implemented the following safety measures:

- A ‘rate limiter strategy’, found under the distribute variable, where you specify how data is handled if the server is overloaded (scroll down for a description of the options of this feature)

- Additionally, there is now an option in the Study Settings tab to specify how many sessions can occur in parallel

- More Text Objects: The following additions have been made to the editor to give you more control over design and presentation:

- DisplayVariable Element: This element allows you to link directly to a variable and display its value within the experiment.

- DisplayHTML Element: If you need to utilize HTML and display it within the study, you can make use of this element.

- SVG stimuli assignment via data frames: Researchers utilizing SVGs in their studies can now assign them to trials via data frames.

IMPROVEMENTS

- Flashing Retry Screen: Fixed a problem where, after a failed eye tracking calibration, the retry screen would overlay the calibration points (during the second phase of the calibration, where there is a circular pattern).

- Duplicate First Sessions: Fixed a problem where participants added in the Participants Tab had two sessions (instead of one) created for their first experiment.

LIBRARY HIGHLIGHTS

What’s new in the library? Here are a few new studies that you can explore and consider importing and working with for your next experiment:

- Animal Word Search (multi-user): In this demo, two players compete to see who can find the most words in a word search puzzle! Try now! (Note: Copy and paste the URL into a second tab and play against yourself if you just want to test it out on the fly!)

- ChatGPT: In this Labvanced demo, you can communicate directly with ChatGPT. Simply enter your prompt and hit the ‘Enter’ key to send. Try now!

NEW DOCS

- ChatGPT Study: This walkthrough explains how to build a study that incorporates the ChatGPT feature, with a chat interface that displays and records the conversation between the participant and ChatGPT.

- SVGs as AOIs in Eye Tracking: In this walkthrough, you can see how an eye tracking study incorporates SVG objects to collect gaze data over Areas of Interest (AOIs).

- Multi-user Show Cursor Location: Are you working on a multi-user study where you want participants to see each other’s cursor location? In this walkthrough, we present two approaches to how you can accomplish this in Labvanced.

NEW PUBLICATIONS

Want to see what other researchers are working on using Labvanced? Check out this list of some examples of newly published research:

- Visual Perception Supports 4-place Event Representations: A case study of TRADING in the Proceedings of the Annual Meeting of the Cognitive Science Society (Vol. 46) by Khlystova, E., Williams, A., Lidz, J., & Perkins, L. (2024).

- What Makes a Movement Human-Like? in Japanese Psychological Research by Yang, X. et al. (2024).

- Inherent Linguistic Preference Outcompetes Incidental Alignment in Cooperative Partner Choice in Language and Cognition by Matzinger, T., et al. (2024).

- Scrolling and Hyperlinks: The effects of two prevalent digital features on children's digital reading comprehension in Journal of Research in Reading by Krenca, K., Taylor, E., & Deacon, S. H. (2024).

UPCOMING

- Task Wizard

- Desktop App with LSL connection

- Phone App for Android

- Improved fixation detection algorithm (eye tracking)

Q2’24 Release Note

NEW FEATURES

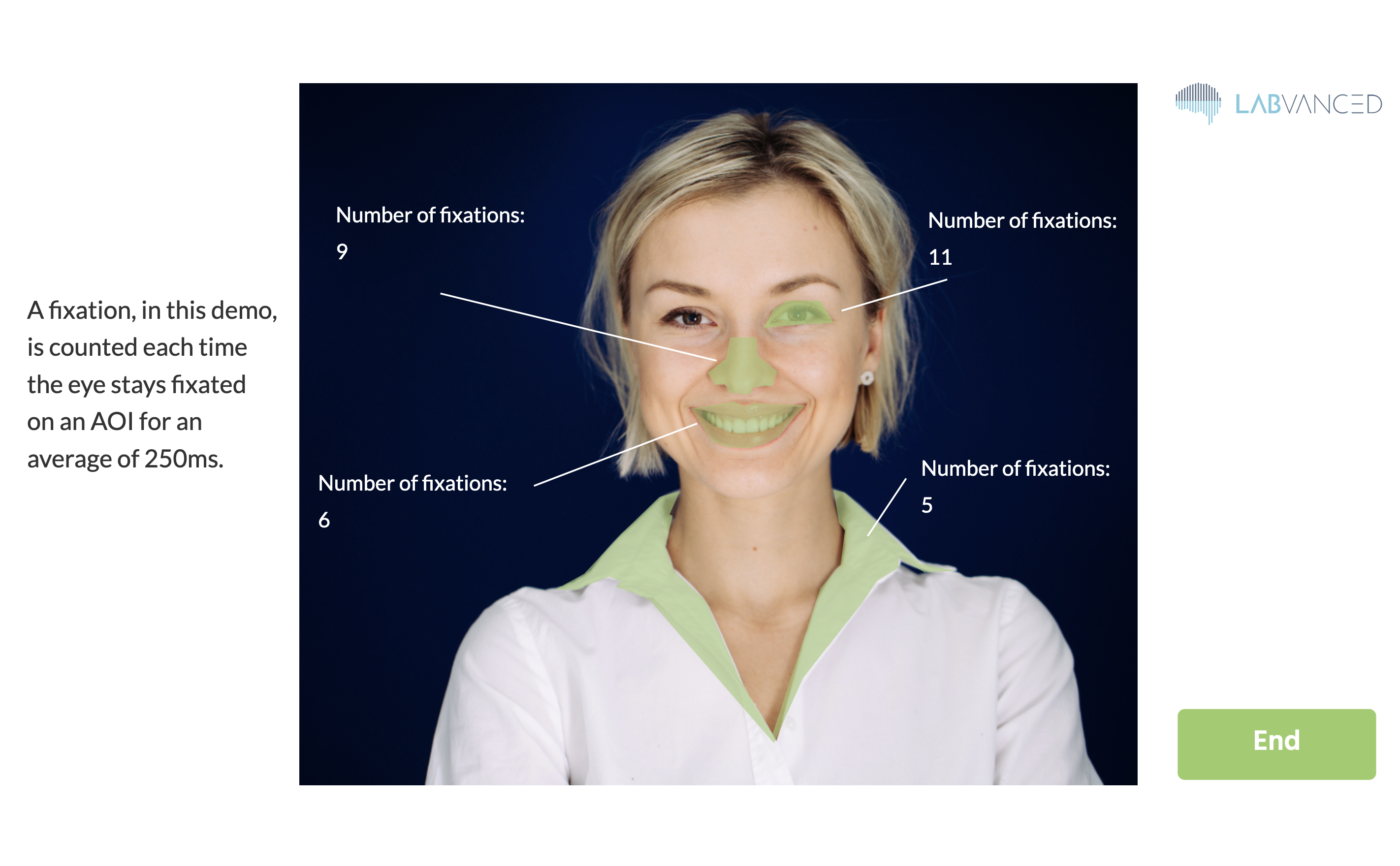

- Complex shapes as AOIs for webcam-based eye tracking using the SVG/Polygon objects: In the past, AOIs or ‘masks’ could only be created using rectangle shapes, which isn’t really suitable for complex shapes. With this new feature, you can use SVG and polygon objects to create AOIs/masks for complex shapes, which can then be used as ‘triggers’ and/or ‘variables’ throughout your experiment! For example, you can specify silhouettes (like people, facial features, or animals) within a complex scene as your AOIs by uploading SVGs or tracing them within Labvanced using the Polygon object.

- As a side note, thank you to all the researchers that have been using our webcam-based eye tracking as a part of their research methods! We have really seen this number grow since we published our peer-reviewed paper on it a few months ago.

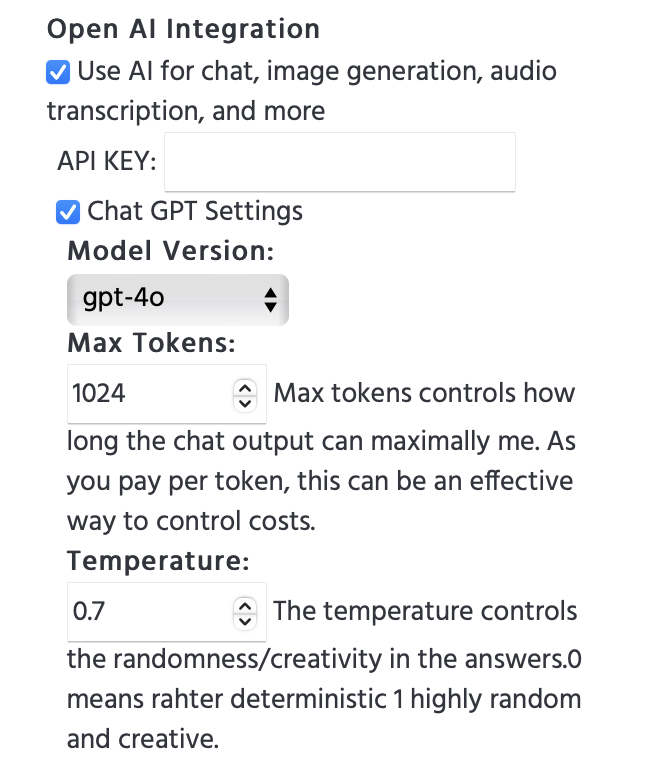

- ChatGPT: A new integration that allows you to connect OpenAI / ChatGPT with Labvanced. Essentially, participants can write in Labvanced using the input field and the response from ChatGPT will be shown right in the experiment! This allows you to collect data about how these interactions unfold, and also to perform advanced analysis in the background while it’s happening!

- In the ‘Experiment Features’ column under ‘Study Settings’, you can make use of the OpenAI integration today.

- Task Wizard: Now, when you create tasks in a new study, you have the Task Wizard available to help you get started. You can specify your overall study structure, including whether you want questionnaires for demographic collection, as well as navigation between the tasks, with just a few clicks, saving you time when you are getting started!

IMPROVEMENTS

- Visually separating ‘Factors’ & ‘Randomization’ in the editor: If you have been building your experiment in Labvanced recently, you may have noticed that the left panel menus have slightly changed. This has been done to improve the language and terminology, but the underlying data model and structure have remained the same, with random factors and fixed factors working as before.

- Smartphone App: The smartphone app has been evaluated further in beta, with even more improvements, and is expected to go fully live in the upcoming quarter. There is now also a library of studies that you can access through it on your phone to start exploring phone-based studies.

NEW DOCS

- Mental Rotation Task | A Spatial Processing Task: An in-depth article about the mental rotation task, including examples of it being used in research!

- 7 Classic Cognitive Tasks: Here, we highlight 7 classic and popular tasks used in cognitive psychology. Is there anything you’d include that’s not already listed?

- Music Research with Labvanced: What are other researchers using Labvanced doing in the field of music psychology? This blog post gives an overview of just that by focusing on relevant publications and their research methods!

NEW PUBLICATIONS

- Beyond built density: From coarse to fine-grained analyses of emotional experiences in urban environments in the Journal of Environmental Psychology by Sander, I., et al. (2024).

- Processing of visual social-communication cues during a social-perception of action task in autistic and non-autistic observers in Neuropsychologia by Chouinard, B., Pesquita, A., Enns, J. T., & Chapman, C. S. (2024).

- Multimedia enhanced vocabulary learning: The role of input condition and learner-related factors in System by Zhang, P., & Zhang, S. (2024).

- How self-states help: Observing the embodiment of self-states through nonverbal behavior in PLOS ONE by Engel, I., et al. (2024).

UPCOMING

- Longitudinal studies - email customization: In the past, email reminders were automatically sent based on the time interval settings you specified. Now, we are extending this capability with the additional option of allowing you to customize the text in the automated email reminders for longitudinal studies.

- Desktop App 2.0: A new and improved version of the Labvanced desktop app will be available very soon! The desktop app will have many useful features, like being able to work in online/offline modes, allowing you to work and make recordings locally in your lab, as well as easily connecting with external devices like EEGs.

Q1’24 Release Note

NEW FEATURES

- Labvanced Mobile now in the Play Store! (BETA): You can now run Labvanced studies in our dedicated Android app (with just 1 click), giving you even more experimental control and design options. The smartphone app will be ideal for longitudinal testing and clinical studies, but all studies can be opened if designed for mobile use cases. We are now looking for more beta testers, so please contact us if you are interested! The iOS version will be coming soon too!

- Email verification: New users now must verify their email address upon sign up.

- Monospace font now available: When adding text elements to your study, you can now select monospace as a font option.

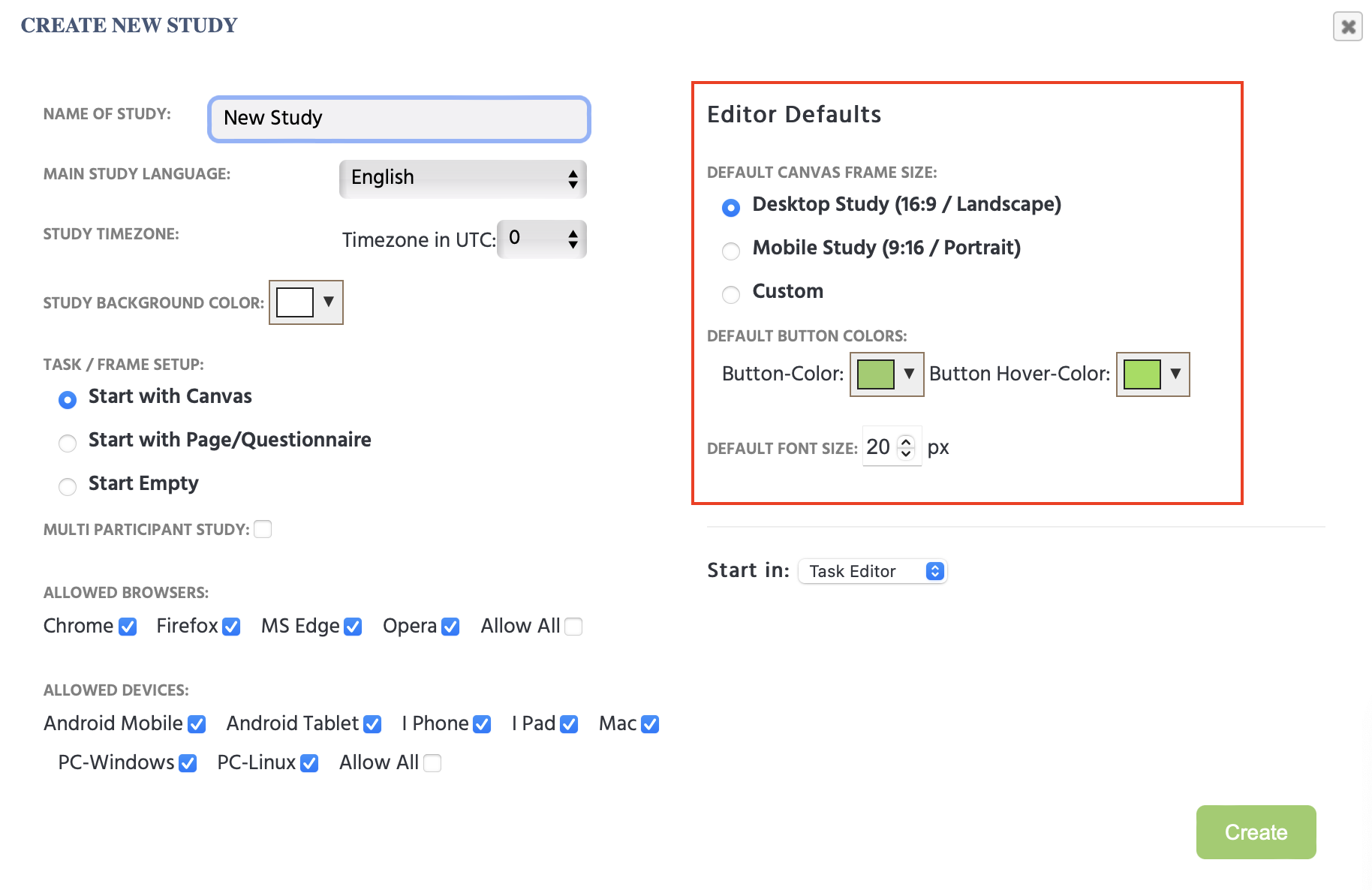

- Default styling for frames: As shown in the image below, you can now set up your new study by specifying defaults for your frames, like setting up frame size for mobile studies with an aspect ratio of 9:16.

- Default styling for study buttons: Also shown in the image below, when creating a new study you can set up a default option for buttons so you don't have to keep styling every time you add a new button to the study. The selected default settings will create a stylized button automatically.

- Email / notification nurturing: Emails and app notifications are now live. They aim to suggest new ideas, assist with onboarding new users, and help with the overall app experience.

IMPROVEMENTS

- Slider element now improved: The slider element (used for questionnaires) now works in all cases, even when the slider handle is hidden.

- Improved security: If your password is changed, you are now logged out of all devices and all sessions on all devices are invalidated.

- Changing your email automatically: Changing the email associated with your account can now be done easily via email verification.

NEW DOCS

- Randomization & Balancing: Discover how randomization and balancing are addressed in Labvanced and which parts of the app you can use to ensure your experiment is randomized and balanced.

- API Access: What are the different APIs that you can use for your Labvanced experiment? From the Webhook API to the WebSocket and REST APIs, we’ve got you covered! Learn more about the options and the circumstances under which you would use them in this API overview.

- Infant-friendly & Remote Eye Tracking: What is the state-of-the-art when it comes to remote and infant-friendly eye tracking? In this blog, we discuss the challenges and solutions for using remote eye tracking as a method in developmental psychology studies.

NEW PUBLICATIONS

How are other researchers using Labvanced? Here are some highlights of recent publications!

- Parallelisms and deviations: two fundamentals of an aesthetics of poetic diction in Philosophical Transactions of the Royal Society B by Menninghaus, W. et al. (2024).

- Touch and look: The role of affective touch in promoting infants' attention towards complex visual scenes in Infancy by Carnevali, L., Della Longa, L., Dragovic, D., & Farroni, T. (2024).

- Effects of a frontal brake light on pedestrians’ willingness to cross the street in Transportation Research Interdisciplinary Perspectives by Eisele, D., & Petzoldt, T. (2024).

- Effects of vertically aligned flankers during sentence reading in the Journal of Experimental Psychology: Learning, Memory, and Cognition by Mirault, J., & Grainger, J. (2024).

UPCOMING

- ChatGPT and further AI integrations: Interested in how people use AI such as ChatGPT or DALL-E? We believe that research about AI usage will explode over the next few years, and hence we will provide native integrations with ChatGPT and other well-known AI applications soon.

- Desktop App: If you are a regular user of Labvanced, you will love to see that a dedicated application (for Windows, Linux and Mac) will be released soon which has some advantages over the pure browser-based version of Labvanced.

- All studies are directly available both online and locally, combining the best of both worlds.

- LSL integration and other dedicated hardware connection solutions through the desktop app will be coming next, enabling you to run EEG studies, connect to third-party eye trackers, and more.

- More native integrations for data export and data analysis will be available in the desktop version. Which feature is most exciting to you?

- Eye Tracking - SVG Area: With this new feature, you will be able to use complex shapes as your defined Area of Interest for eye tracking studies. Currently, you can use simple shapes (like a rectangle image) as the Area of Interest. With this new feature, you can upload an SVG of a complex shape (like an elephant) and can create your own invisible masks and count the number of fixations in that area in eye tracking studies. In the future, you will also be able to trace an area.

- PageGazer: Understanding how online users behave and make decisions has strong implications for UX designers, marketeers, e-commerce owners, and even for product management and policy makers.

- However, existing tools that offer to study consumer behavior on websites have clear shortcomings. The 3 most severe limitations are: 1) Requiring additional installations such as browser plugins, strongly limiting who can be a participant. 2) Treating the entire website like a picture or video leading to an inability of tracking interactions or aggregating data across subjects. 3) Only offering mouse tracking, but no way to track attentional processes using eye-tracking technology.

- PageGazer is a new and powerful tool for online behavior and consumer research that we are developing. It will overcome these limitations and thereby vastly expand the kind of data that can be collected and the insights that can be gained in remote data collection scenarios.

- If this sounds interesting to you, please subscribe to our PageGazer newsletter to stay in the loop on the latest announcements.

Q4’23 Release Note

- New & Improved Desktop App - Almost here: Bring Labvanced directly to your desktop. A revamped and user-friendly app will soon be available for researchers to download directly to their desktop for in-lab research. Local studies can be auto-synchronized directly with the latest online state, so that study creation/editing and local (offline) execution merge with each other. Importantly, our ready-made LSL Python scripts can be used here to easily connect with external hardware such as EEG systems during local recordings. Our vision is to offer a single application that can easily be used to conduct both online and local / in-lab recordings while using the same study and code base instead of re-implementing and running things in various environments. And this is just the start!

- Smartphone App: Beta Testing: Please contact us if you are interested in testing out the smartphone app in its beta stage. Ideal for researchers who want to use this feature in the future and provide feedback. The smartphone app will be available for Android and iOS which will give researchers more opportunities and control over their experimental design and execution in mobile testing scenarios. The app will have two basic access points:

- A login for researchers who can sign in and manage their studies and recordings and treat it as an admin portal (also to start recordings). This can be particularly useful for onsite and/or clinical research.

- A (password-free) remote login for participants who can use a deep link to open the app with a specific Labvanced Study ID, transforming the app into a customized application for a particular study. In other words, you can create your study on Labvanced as usual, but run it as a mobile native app for data collection purposes. This of course also works in combination with crowdsourcing tools like Prolific or MTurk. The smartphone app is particularly useful for longitudinal studies, i.e. studies with multiple sessions, for decentralized clinical trials, or for training sessions where educational intervention is a main component. Automatic push notifications remind the participants to complete the next study session in a timely manner.

Note: While the smartphone/desktop app is not licensed as a medical product (yet), it can be used in scenarios where participants come into the lab, clinic, or the doctor's office to do an on-site test without relying on an internet connection. The data will be recorded locally on the device and automatically uploaded to the cloud once internet connection is restored.

- In case you missed it, the Labvanced webcam-based eye tracker has been peer-reviewed! See this paper in the journal Behavior Research Methods and consider citing it in your next publication! These are some key findings from it:

- Labvanced’s webcam-based eye tracking has an overall accuracy of 1.4° and a precision of 1.1°, with an error about 0.5° larger than that of the EyeLink system.

- Interestingly, both accuracy and precision improve (to 1.3° and 0.9°, respectively) when visual targets are presented in the center of the screen - something that’s important for researchers to take into account given that the center of the screen is where stimuli are presented in a lot of psychology experiments.

- For free viewing and smooth pursuit tasks, the correlation was around 80% between Labvanced and EyeLink gaze data. For a visual demonstration of what this correlation looks like, see Figure 7 in the research paper, which shows the overlap of the data points between Labvanced (blue dots) and EyeLink (red dots).

- Also, the accuracy was consistent across time. Overall, these results show that webcam-based eye tracking by Labvanced is a viable option for studying the physiological signals of attention.

- In Progress:

- Advanced Email & System Notifications: Soon, users will receive customized suggestions on how to make the most of the platform, delivered through email and notifications.

- Study Wizard: The wizard is a step-by-step interactive guide that will help new users build a study that can be configured with a spreadsheet.

- ET Fixations: Our peer-reviewed ET publication demonstrates our excellence in webcam-based eye tracking, providing arguably the most accurate gaze data using this method. And while other solutions do exist, it seems that none of them even thought about proper fixation detection (probably because their data is too noisy and has too few samples). Classifying fixations is, however, a very important aspect of eye tracking research, and while we already have an algorithm available, our next goal is to improve it substantially. For this, recruiting and collaboration efforts are ongoing.

Q3’23 Release Note

- Our New Publication on Our Webcam-based Eye Tracking: We are happy to announce that our latest publication, which compares the accuracy of our webcam eye tracking system to an industry standard (EyeLink 1000), is finally out. While this took us quite some time and resources, it verifies our methodology in a very good journal and we are extremely happy to share this with you today! A big thank you to all the researchers who have already used our eye tracking and a big invite to everyone else to do the same! Check out the publication here in the journal Behavior Research Methods.

- Key findings from the publication:

- Labvanced’s webcam-based eye tracking has an overall accuracy of 1.4° and a precision of 1.1°, with an error about 0.5° larger than that of the EyeLink system.

- Interestingly, both accuracy and precision improve (to 1.3° and 0.9°, respectively) when visual targets are presented in the center of the screen - something that’s important for researchers to take into account given that the center of the screen is where stimuli are presented in a lot of psychology experiments.

- For free viewing and smooth pursuit tasks, the correlation was around 80% between Labvanced and EyeLink gaze data. For a visual demonstration of what this correlation looks like, see Figure 7 from the publication, which shows the overlap of the data points between Labvanced (blue dots) and EyeLink (red dots).

- Also, the accuracy was consistent across time.

- Awarded European Network of AI Excellence (ELISE) Funding: As AI researchers who have brought you accurate webcam-based eye tracking, being awarded the European Network of AI Excellence (ELISE) grant helps us take the next step in providing you with more opportunities for online research through the development of PageGazer. While using the same underlying technology, PageGazer will be a new platform that focuses on performing UI/UX, marketing, and general website research. PageGazer will be able to include our innovative eye tracking technology in any website, and augment this with smart website parsing to derive new research insights. The ELISE funding will help us achieve this goal faster and more efficiently, and it again verifies the excellence and innovation of our approach. If you want to know more or be part of an EXCLUSIVE and free BETA TEST phase for PageGazer, please contact us. We are currently looking for first users who are excited to try out something new!

- New Features:

- Canvas (Free Drawing) Element: The canvas (free drawing) element is now available as a shape object that you can use in your experiment to draw freely with shapes, strokes, or text. This allows you or participants to input free form responses in an experiment.

- Media Elements on Questionnaires: Now, it is possible to insert media elements, such as videos, directly into questionnaires (i.e. page frames). This gives you more control over your experimental creation process by letting you place different types of visuals directly into the survey.

- REST API Integration: Now available, the REST API allows you to download data programmatically. You create an API token in the application and use it to authenticate your API client, which gives you immediate access to the data from the server. Researchers have already begun using this new capability, as it lets them streamline their process even more. The REST API is great for organizations and academic research institutions that want to centralize their data fetching and store their data in dedicated places as per their pipeline protocol. Instead of selecting and downloading data manually and saving it locally, you can access the data via your own servers, which is important for GDPR protocol.

- Improvements:

- Server-side improvements: While not directly visible to you in your day-to-day experimental creation and process, our latest server-side improvements have a big impact for online studies because they ensure improved performance and reliability. A few examples of the improvements we made include: increased server capacity, more automated updates, an automatic failover if one server fails, and better monitoring services for assessing the healthiness of the servers.

- New payment flow: The new payment flow is here! For new license holders, payment is now required prior to activating the license. A few other related improvements include: improved UI, a secure public payment link which allows another individual to pay for your license (important for purchasing departments), reopening an order, and automatic detection of payments via bank transfer through reference code recognition.

- In Progress:

- Smartphone app: With a mobile app, clinical trials and longitudinal studies can make more use of Labvanced through features like push notifications that remind participants to take part, and through not relying on the internet for certain tasks.

- Desktop app: With LSL integration and online/offline (i.e. local) modes combined in a desktop app, you can connect Labvanced to local devices like EEG systems.

- Email nurturing and notifications: Soon, users will receive customized suggestions on how to make the most of the platform, delivered through email and notifications.

- New Study Wizard: To accelerate study implementation, a study wizard will be available to help new users, who face a learning curve when first joining Labvanced, get their study to where it needs to be!

Q2’23 Release Note

- Research Grant Program: A new and exciting opportunity for all researchers out there, this grant program is perfect for those who want to boost their careers and credibility with a small grant that will support their research in Labvanced. Benefits include financial support for attending a conference, as well as recruiting study participants.

- Free Drawing Element: By popular request, you can now draw directly in the editor thanks to the newly added Canvas Drawing element. This new feature allows the experiment creator and participant to draw freely and also to insert shapes like circles and triangles. This feature can be relevant for many experimental use cases like free association.

- Automatic Subject Reassignment (stronger randomization and balancing): This feature, requested by many researchers who wanted stronger balancing methods, is here! Automatic subject reassignment is now possible in Labvanced. What does this mean? If, let’s say, subject 17 drops out of the experiment, the system can now automatically assign a new participant to fill this subject number. Why is this important? Because in many experiments the subject number plays a role in the type of stimuli that the participant sees. In the past, the system took dropouts into consideration only on the group level, not on the stimuli level. As a result of this feature, randomization has become more powerful. Another example of this feature at work comes during manual discarding. If the researcher manually discards a participant, for whatever reason, the next participant newly enrolled in the study can automatically fill the spot of the discarded participant. This ensures that the experiment is completed and that the researchers receive all the data they need.

- Switch toggle for eye tracking to make it kid-friendly: In the study settings for eye tracking, there is a newly implemented switch toggle that turns kid-friendly eye tracking on and off. Before this update, it was just a checkbox and, once it was turned on, it was not possible to switch it off.
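To illustrate the idea behind automatic subject reassignment, here is a minimal Python sketch. It is not Labvanced's implementation; the `SubjectPool` class and its method names are hypothetical, and the sketch simply assumes that a freed subject number is handed to the next participant who enrolls.

```python
import heapq


class SubjectPool:
    """Hypothetical helper: hands out subject numbers and reuses freed ones."""

    def __init__(self) -> None:
        self._next_new = 1            # next never-used subject number
        self._freed: list[int] = []   # min-heap of numbers freed by dropouts/discards
        self._active: set[int] = set()

    def enroll(self) -> int:
        """Give the next participant a freed number if one exists, else a new one."""
        if self._freed:
            number = heapq.heappop(self._freed)
        else:
            number = self._next_new
            self._next_new += 1
        self._active.add(number)
        return number

    def release(self, number: int) -> None:
        """Mark a subject number as free again (dropout or manual discard)."""
        if number in self._active:
            self._active.remove(number)
            heapq.heappush(self._freed, number)


pool = SubjectPool()
for _ in range(20):
    pool.enroll()              # subjects 1..20 join the study
pool.release(17)               # subject 17 drops out
refilled = pool.enroll()       # the next participant fills slot 17
following = pool.enroll()      # afterwards, numbering continues with 21
```

Because stimuli are often tied to the subject number, refilling slot 17 keeps the set of stimulus conditions complete instead of leaving a gap.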

- Safari is now disabled by default as a browser: Safari is an odd browser that doesn’t always act as expected, especially when it comes to innovative browser-based technologies such as Labvanced. We have disabled Safari as an option for running experiments (yes, it can be turned back on and be made available). The reason for this decision is that more advanced features like eye tracking and audio autoplay were not working well on this browser, so Safari is not recommended for experiments using such advanced features.

- Joined Audio/Video and Object triggers into a single trigger: The editor UI has been updated so that the trigger for Audio/Video is the same as the Object trigger. Based on the type of trigger, a different drop down menu with specific options will appear. This helps make the experiment creation process more streamlined and reduces the need of performing multiple steps over and over again. Please note that existing studies using the now retired Audio/Video trigger are not affected.

- Joined Audio/Video and Control Object actions into a single action: Similar to the point above about triggers, the same has been done for the corresponding actions for Audio/Video and Control Object. Please note that existing studies using the now retired Audio/Video action are not affected.

- Easier sharing for group license holders: If you have a group license, it is now easier to share studies with people who have the same classroom license or redemption code. This works for 6 months after the code has been activated, even if they have a different license or have only a free account.

- In Progress

- New REST API: This is a standard API that works with a generated code, allowing you to log in and download all of your data programmatically. The REST API will be great for organizations and academic research institutions that want to centralize their data fetching and store their data in dedicated places as per their pipeline protocol. Instead of selecting and downloading data manually and saving it locally, organizations can access the data on their own servers, which is important for GDPR protocol.

- Onboarding: We are still working on the onboarding process where new users can learn in an interactive manner as soon as they create a new account.

Q4’22 Release Note

New Dashboard: We have launched a new dashboard, stronger than ever before, designed to help you master the Labvanced platform and stay aware of all of the latest updates. On the new dashboard, you can find:

- Checklists specifically designed to be onboarding tools

- Study insights to help you keep track of your research progress

- Videos arranged in the order that best teaches you the LV platform

- Notifications, library updates, and twitter feed all in one place

Action Groups: Events have two major components, a trigger and an action. Oftentimes, a trigger requires multiple actions to be performed. For example, if a participant clicks a button, a stimulus needs to be enlarged, change position, and fade away. Now, you can group these three actions (and more depending on your experiment) into a single group! This helps with organizing and copying the actions so that you can work more efficiently when creating an experiment.

Shared Variables: Shared variables are dynamic variables which can be shared across several experimental sessions and/or participants. The variables are stored on the Labvanced server, working as an array to help you unlock a new level of experimental possibilities when creating and planning your study. The shared variables are particularly useful for longitudinal studies, but also multi-user studies, and even help with between-subjects balancing. Here is an example of shared variables in action: Imagine your study has 10,000 images and you want to show 100 images to each participant, with the shared variable you can ‘remember’ which of the 100 images were shown and randomly assign the remaining images to other participants. Pretty cool, right?
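The image example above can be sketched in a few lines of Python. This is only an illustration of the bookkeeping, not how Labvanced stores shared variables: the `shown` set stands in for the shared variable, and `assign_images` is a hypothetical helper.

```python
import random


def assign_images(shown: set[int], total_images: int,
                  per_participant: int, rng: random.Random) -> list[int]:
    """Pick images that no earlier participant has seen and record them.

    `shown` plays the role of the shared variable: it persists across
    participants and 'remembers' every image index handed out so far.
    """
    remaining = [i for i in range(total_images) if i not in shown]
    if len(remaining) < per_participant:
        raise ValueError("not enough unseen images left")
    chosen = rng.sample(remaining, per_participant)  # random draw without replacement
    shown.update(chosen)                             # update the shared state
    return chosen


shown_images: set[int] = set()          # stand-in for the shared variable
rng = random.Random(0)                  # seeded only to make the sketch repeatable
first = assign_images(shown_images, 10_000, 100, rng)
second = assign_images(shown_images, 10_000, 100, rng)
```

With 10,000 images and 100 per participant, this scheme serves 100 participants before the pool of unseen images is exhausted.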

'Read from/Write to' Action Command: This is a new action that can be added to your variables for reading (i.e. 'Read from') and saving (i.e. 'Write to') data. Now you can use the following options to read and save variable values:

- Option 1, Device: This option reads variable data from and saves it on the local device. This is useful for data that needs to be stored locally, for example, if you are running a longitudinal study and need to ensure participants are using the same device across the entire experiment.

- Option 2, Shared Variable: Reading and saving data to shared variables is a very powerful option. In this case, the data is stored on the servers and can be distributed to other trial sessions, as well as participants, depending on what you want to do.

Custom CSS in the Task Editor: The CSS properties of an element can be changed in the Object Properties tab by clicking on the element and checking the box “change CSS properties.” This adds the ability to write custom code for an object to change its appearance in more specific ways than using the object properties window alone. See an example of how the Custom CSS works in the task editor. This is the first iteration and more improvements are to come.

Home Page: New & Improved: The Home Page has received a massive make-over! It contains plenty of new information about the Labvanced platform, use cases, testimonials, and the ability to request a support call with a special dialog box that opens up, as well as a newsletter field where you can sign up to receive updates and release notes such as this one.

Eye Tracking: Faster & More Accurate: Probably our most exciting announcement in this entire release note, our webcam-based eye tracking has received a major upgrade, allowing you to reach new heights of temporal accuracy in your research!

- First, in order to reach this new level of accuracy, we had to do some cleaning up. We completed a big refactor of the repository, reorganizing many classes and files in order to make the neural network run more optimally. In this step, we also removed some unnecessary functions, leading to more efficient code.

- Second, in order to develop a faster inference method for predicting gaze, we increased the number of images that are fed into the neural network during the sampling process. Before, we took a snapshot based on time, such as every 30 ms, but it wasn’t clear exactly when the webcam delivered a new frame. Now, we have harnessed new browser innovations that tell us exactly when a new camera image occurs (on a microsecond level) using the now available GPU timestamp, and we have incorporated this into our algorithm. As a result, we know precisely when each camera snapshot was taken, so the temporal resolution is more precise, leading to more data.

- Together, this allows you to have an improved and more powerful calibration process where instead of relying on a single snapshot per time point, we can acquire three! Furthermore, this allows us to better handle 60Hz webcams, leading to better data quality, and ultimately opens the door for working with eye saccades using remote eye tracking!

Media Elements on Page Frames: Due to popular demand, you can now add media elements on page frames! In the past, this capability was limited only to canvas frames where editing is more open-ended. Thanks to user feedback, page frames (used for building questionnaires) can now also present media elements such as videos. Try it out! Go to the editor, add a page frame and upload an audio recording (or whatever element you’d like) and attach questionnaire elements (like a Likert-scale or a slider range) to go with it.

Public Experiment Library: The Public Experiment Library is a powerful and open resource available to all Labvanced users! As you might already know, the Public Experiment Library contains hundreds of studies from the Labvanced team and other researchers in the community who wish to make their experiments publicly available. With new additions and expansions, the Public Experiment Library has been updated to be more powerful and user-friendly. Now, the searching capabilities are more powerful, the criteria are more informative, and there are more options available. You can view studies, participate in them, import them into your own account, and modify them if necessary to suit your experimental goals. You can also conduct an advanced search to find studies that meet your criteria, such as including eye tracking or being a multi-user study. You can even give a study a ‘like’! Learn more about the search features and the general functions of the Public Experiment Library.

Description Tab Updates: The Description Tab is a part of the editor menu that you can access to control how your study is presented and what kind of information is provided in the Public Experiment Library. New additions allow you to:

- Create custom affiliations and to search for universities to add as affiliations

- Add keywords to describe a study, such as ‘spatial cognition’ or ‘intelligence’

- Specify branch(es) of Psychology that are relevant to your study, such as ‘cognitive psychology’ or ‘social psychology’

- Create a unique Public Study Name to be displayed. It can now be different from the study name shown in your account.

'My Account' Tab Updates: Under the ‘My Account’ tab on the editor, you are now able to see a message when your account is expiring and are presented with an option to renew the account right from that page. You can also fill in more information about your research background, such as your position and field in psychology.

Import Options: Data Frames: Importing data frames is now easier than before as we have added new options and improved the way that data is handled. Now, you can use the first row of your spreadsheet as headers and transpose the data. If you are importing large sets of data, the data frames can also map directly to files and images in your experiment. For example, if you have your visual stimuli written out in one column, such as ‘cat.png’, and in another column you specify another variable, such as trial number or Subject ID, this mapping can be set up right in the importing step.
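The two import options, "first row as headers" and "transpose", can be illustrated with a short sketch in plain Python. This is only an illustration of what the options do to a spreadsheet; the function `load_frame` and its parameter names are hypothetical and are not part of Labvanced.

```python
import csv
import io


def load_frame(text, first_row_is_header=True, transpose=False):
    """Parse CSV text into a list of row dictionaries.

    transpose=True flips rows and columns before the header is read,
    which helps when each variable was written out as a row.
    """
    rows = list(csv.reader(io.StringIO(text)))
    if transpose:
        rows = [list(column) for column in zip(*rows)]
    if first_row_is_header:
        header, body = rows[0], rows[1:]
    else:
        # No header row: fall back to generic column names.
        header = [f"col_{i}" for i in range(len(rows[0]))]
        body = rows
    return [dict(zip(header, row)) for row in body]


# One stimulus file per row, with the trial number in a second column.
by_rows = load_frame("stimulus,trial\ncat.png,1\ndog.png,2\n")

# The same data written sideways; transposing recovers the same frame.
sideways = load_frame("stimulus,cat.png,dog.png\ntrial,1,2\n", transpose=True)
```

Both calls yield the same two rows, each pairing an image file name with its trial number.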

Study Settings: New Options: When you have a study selected, the ‘Study Settings’ tab becomes visible on the side-menu of the editor. Under ‘Study Settings’, there are now a few new options that give you even more experimental control over your study. Now you can:

- Block “dark mode” theme: This is particularly useful if you allow experiments to be completed on mobile devices, where ‘dark mode’ can cause incorrect CSS changes on some devices.

- Enable or disable “start study in fullscreen mode”: Some researchers wanted the flexibility of not starting immediately in fullscreen mode. Now it is possible.

- Require a minimum and maximum screen refresh rate: Specifying the limits of the screen refresh rate you want to permit in your study is important for precise timing. Since screens can have different refresh rates, for example a new computer can have a 150 Hz monitor vs. an old 45 Hz monitor, you can limit who participates in your study based on this hardware parameter.
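To see why the refresh rate matters for timing, consider the arithmetic behind it: a screen can only change its content once per refresh, so one frame lasts 1000 / Hz milliseconds and every stimulus duration is rounded to a whole number of frames. The sketch below works through this in plain Python. The function names and the rounding rule are illustrative assumptions, not a description of how Labvanced schedules stimuli.

```python
def frame_duration_ms(refresh_hz):
    """Length of a single screen refresh in milliseconds."""
    return 1000.0 / refresh_hz


def shown_duration_ms(target_ms, refresh_hz):
    """Duration actually displayed when the target is rounded to whole frames.

    Assumption for illustration: round to the nearest frame, at least one.
    """
    frames = max(1, round(target_ms * refresh_hz / 1000.0))
    return frames * 1000.0 / refresh_hz


def within_limits(refresh_hz, min_hz, max_hz):
    """True if a participant's screen falls inside the permitted range."""
    return min_hz <= refresh_hz <= max_hz


# A 50 ms stimulus lands exactly on a 60 Hz screen but not on a 45 Hz one.
on_60 = shown_duration_ms(50, 60)
on_45 = shown_duration_ms(50, 45)
```

On a 45 Hz screen a frame lasts about 22.2 ms, so a 50 ms target ends up as two frames (about 44.4 ms), while a 60 Hz screen shows it for exactly three frames.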

Increased Sharing Abilities for Departmental License Holders: There are now different study sharing abilities between department licenses and group licenses. Department licenses have more sharing privileges which includes giving editing ability of a study to a user who is not a member under the department license but has their own group license. By contrast, group license holders can only share a study with users who fall under their license. This change allows two groups that are using Labvanced to have a shared project.

New Sample Studies Page: The new Sample Studies Page is a powerful resource, a page dedicated to highlighting some of the best studies on the Labvanced platform. Essentially, these are templates organized by psychology use cases (everything from behavioral psychology to cognitive psychology to sports psychology and more) so that you can import these templates and get started with your study. The Sample Studies page is dedicated to sharing not only useful studies and tasks, but also showcasing useful demos and add-ons. All studies and content on this page have been checked and validated by the Labvanced team. For more information about how to make use of this page, read the dedicated blog post explaining its ins and outs: Sample Studies - Helpful Templates & Demos!

In Progress:

- Onboarding: We want to improve the user journey as much as possible, to help all researchers understand the power and usability of Labvanced. In this release note we announced a new dashboard, but up next we have an onboarding process in the works! What does this include? Upon signing up and logging in to the platform, there will be a moving dialog box that shows you step by step where you need to click in order to create an experiment from start to finish! This interactive and dynamic approach will help users learn the platform faster and gain more confidence in building a study online.

- Improved Balancing: Many of you have asked us to improve the balancing methods. Consider an experiment designed with 100 columns, where there is one column per subject and one row per trial. Soon, there will be an optional timeout with automatic subject reassignment, as well as the ability to manually discard subjects. So, for example, if subject 17 drops out, the system will automatically assign a new subject to this column as per your specified criteria.

- Free Drawing Element: Soon, you will have the option to enable a Free Drawing element in your study. This will allow participants to use their mouse to draw or trace freely as a response!
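As a rough picture of the timeout and reassignment idea described under Improved Balancing above, here is a minimal sketch in plain Python. Since the feature is still in progress, everything in it is an assumption for illustration: the function `next_column`, the layout of the `assignments` dictionary, and the rule of handing out the lowest free or timed-out column first.

```python
def next_column(assignments, now, timeout_s, n_columns):
    """Return the column the next subject should take, or None if all are used.

    `assignments` maps a column index to {"started": seconds, "done": bool}
    (hypothetical layout). A column is handed out again when its subject
    has not finished within `timeout_s` seconds.
    """
    for column in range(n_columns):
        slot = assignments.get(column)
        if slot is None:
            return column  # never assigned
        if not slot["done"] and now - slot["started"] > timeout_s:
            return column  # subject dropped out, reuse the column
    return None


# Column 0 is complete, column 1 was started long ago and never finished.
state = {
    0: {"started": 0.0, "done": True},
    1: {"started": 0.0, "done": False},
}
reassigned = next_column(state, now=100.0, timeout_s=50.0, n_columns=3)
still_waiting = next_column(state, now=10.0, timeout_s=50.0, n_columns=3)
```

In the first call the abandoned column is handed out again; in the second the timeout has not yet passed, so the next free column is used instead.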

Q3’22 Release Note

The New Public Experiment Library is Now Live! Have you tried out our new public experiment library? We have redesigned the library, making it easier to search through published studies, templates, and demos. The library is now a table, so you can filter, search, and sort according to your needs. Just click the green ‘ADVANCED SEARCH’ button in the upper left corner. You will see various fields and options for sorting the library, such as: Study Name or ID, Author Name, and Affiliation (university). You can also select whether you want the displayed studies to include Eye Tracking, or specify whether a study is Multi-User or which Category/Branch of psychology it falls under. Now, you can also copy the URL of your query and share it with others.

Soft Delete Provision for One Month: Now, when users delete their data from the Labvanced servers, a soft delete provision applies: the data is only deleted permanently, and automatically, after one month. This window is like a safety net in case you change your mind or made a mistake. For example, if recording data was deleted accidentally, either by enabling/disabling the recording or through the DataExport page, it is now possible to restore it. To restore it, just send a request ticket to the Labvanced support team and a Labvanced administrator can restore it. But, this must happen before a full month passes from the date the deletion occurred.
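The restore window comes down to a simple date check, sketched below in plain Python. The exact length of "one month" is not specified here, so the 30-day value is an assumption for illustration, as is the function name `can_restore`.

```python
from datetime import datetime, timedelta

# Assumption: "one month" is treated as 30 days for this illustration.
RETENTION = timedelta(days=30)


def can_restore(deleted_at, now):
    """True while the soft-deleted data is still inside the restore window."""
    return deleted_at <= now < deleted_at + RETENTION


deleted = datetime(2022, 9, 1)
in_time = can_restore(deleted, datetime(2022, 9, 20))
too_late = can_restore(deleted, datetime(2022, 10, 5))
```

A restore request sent on 20 September would still be inside the window, while one sent on 5 October would come too late.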

Range Element: The range element in the app editor now has additional options for customizations, including:

- Hiding the range element handles

- Making the range element required, so that the user MUST interact with it, or the experiment does not progress.

Elements' Visibility in the Editor: Another feature we have added has to do with editor visibility and locking. Now, elements can be locked in a particular position in the editor and any movement or clicking on it will not have an effect as long as it is locked. Also, the elements’ visibility can now be toggled.

Sending a Final Reminder Email to Participants: For longitudinal studies, participants are expected to perform an experiment and/or complete questionnaires at multiple points. Thus, we offer an automatic way to email participants reminders. Before this improvement, we were sending 2 emails per session. Now we send out 3 emails, with the third email being sent out a few hours before the closing time of the session, and we have already seen a reduction in attrition rates as a result.

Preventing Deletion of Groups, Sessions, and Blocks: We have removed the option to delete Sessions, Groups, and Blocks in the study editor page, but only if recording is already active and the study is live. This prevents crashes and unexpected results. Before this improvement, a user could, for example, have 2 groups with data recorded for each group and then delete one group while leaving the experiment on ‘data recording active.’ However, when you delete a group (or session or block), a mismatch is created between the existing data and the deleted session / group / block.

Now that the deleting mechanism is gone, if you want to change the experimental design (by removing sessions/groups/blocks) you have two options:

- Secure the data locally by downloading it and then delete the data in the Labvanced app after you have your copy. Then, go back to the editing mode in the same study and change the experimental design accordingly.

- Alternatively, you can create a new draft by copying the original study and then updating the experimental design (by removing the session/group/block) in the copy.

Unexpected Behaviour of Trial and Frame Timestamp: When creating an experiment and adding a variable, sometimes users forget to uncheck the 'Reset trial variable' option under the variable properties section. This leads to some unexpected NaN values in timestamps. To avoid this, we have added an alert message while creating the event. Sometimes a trial variable will need to be reset at the beginning of a new trial. In other cases, it is important to have the same trial variable across the experiment. For example, you might want to access a variable from a task earlier (using the age from a questionnaire at a previous trial and then accessing it at a later point in a different trial).

Auto Enabling of Survey Data Variables in DataExport Page: In the new DataExport page, the survey data variables (such as: Gender, Age, Language, Country etc.) will automatically be part of the export page if any data is recorded in any of them or if they are enabled by the study owner. Previously, each of these variables had to be enabled manually from the variables page.

Optimized Functionality of DataExport Page: In addition to the above, we fixed an issue with download requests from the DataExport page. Some users were experiencing crashes because an unnecessary call to the server was being performed in the background, adding extra time to each request. We fixed this issue and reduced the serving time for download requests.

In Progress: The following new features and improvements are currently in progress and our team is working hard to complete them:

- New Home Page: We are designing a new website. It will have a modern look and feel as well as more content and helpful information for our users.

- Dashboard Revamp: We are also working on a new design of the app dashboard that you see when you log in, which will be more intuitive and more actionable, with advanced information and features.

- Cross Session Data Access: With this feature, it will be possible for participants to access data produced by another participant. This will allow for the comparison of a subject’s performance to the general population and to the distribution of performance of all former subjects. Another scenario for this feature would be to access past data in a longitudinal study for the same subject, enabling the tracking of individual performance changes across sessions.