Labvanced Eye Tracking

Contents:

- Introduction: Innovative and Accurate Webcam Eye Tracking

- Peer-Reviewed Publication: Webcam-based Eye Tracking from Labvanced

- Background - What is Webcam Eye Tracking?

- Our Process: How does Labvanced’s webcam eye tracking work?

- About System Architecture and Eye Tracking Data Flow

- Key Features of Labvanced’s Webcam Eye Tracking

- Sample Data and Metrics from Webcam Eye Tracking

- Examples of Incorporating Webcam Eye Tracking Data in Experiment Design

- Research Papers Using Labvanced's Webcam-based Eye Tracking

- Labvanced Library Studies

Introduction: Innovative and Accurate Webcam Eye Tracking

Researchers from all over the world have employed our webcam-based eye tracking technology in order to better understand attention.

Now, with a peer-reviewed publication on hand, you can make use of innovative webcam-based technology with confidence.

Read this peer-reviewed paper in the journal Behavior Research Methods, which compares the accuracy of our webcam-based eye tracking solution to that of EyeLink.

Peer-Reviewed Publication: Webcam-based Eye Tracking from Labvanced

KEY FINDINGS

- Labvanced’s webcam-based eye tracking has an overall accuracy of 1.4° and a precision of 1.1°, with an error about 0.5° larger than that of the EyeLink system.

- Interestingly, both accuracy and precision improve (to 1.3° and 0.9°, respectively) when visual targets are presented in the center of the screen - something that’s important for researchers to take into account given that the center of the screen is where stimuli are presented in a lot of psychology experiments.

- For free viewing and smooth pursuit tasks, the correlation between Labvanced and EyeLink gaze data was around 80%. For a visual demonstration of what this correlation looks like, see the image below, which shows the overlap between the data points from Labvanced (blue dots) and EyeLink (red dots).

- Also, the accuracy was consistent across time.

Fig 1. Graphs from the research paper (Fig. 7 in the publication) for a smooth pursuit task, showing the overlap between the gaze data points from Labvanced (blue dots) and EyeLink (red dots). For free viewing and smooth pursuit tasks, the correlation between Labvanced and EyeLink gaze data was around 80%.

Cite this paper:

- Kaduk, T., Goeke, C., Finger, H. et al. Webcam eye tracking close to laboratory standards: Comparing a new webcam-based system and the EyeLink 1000. Behav Res (2023). https://doi.org/10.3758/s13428-023-02237-8
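
As a rough illustration of how figures like the accuracy and precision values above can be computed, the sketch below converts on-screen offsets to degrees of visual angle, then derives accuracy as the mean offset from a target and precision as the RMS distance between successive samples. These are common conventions and an assumption here; the paper's exact definitions may differ. The function names, the `px_per_cm` value, and the viewing distance are hypothetical.

```python
import math


def px_to_deg(distance_px, px_per_cm, viewing_distance_cm):
    """Convert an on-screen distance in pixels to degrees of visual angle."""
    size_cm = distance_px / px_per_cm
    return math.degrees(2 * math.atan(size_cm / (2 * viewing_distance_cm)))


def accuracy_deg(samples, target, px_per_cm, viewing_distance_cm):
    """Mean offset between gaze samples and the target, in degrees (assumed definition)."""
    offsets = [math.dist(sample, target) for sample in samples]
    mean_offset_px = sum(offsets) / len(offsets)
    return px_to_deg(mean_offset_px, px_per_cm, viewing_distance_cm)


def precision_rms_deg(samples, px_per_cm, viewing_distance_cm):
    """RMS of distances between successive samples, in degrees (assumed definition)."""
    steps = [math.dist(a, b) for a, b in zip(samples, samples[1:])]
    rms_px = math.sqrt(sum(step * step for step in steps) / len(steps))
    return px_to_deg(rms_px, px_per_cm, viewing_distance_cm)


# Hypothetical gaze samples (x, y) in pixels recorded while fixating one target.
gaze = [(100, 100), (103, 104), (98, 101)]
print(accuracy_deg(gaze, (100, 100), px_per_cm=40, viewing_distance_cm=60))
print(precision_rms_deg(gaze, px_per_cm=40, viewing_distance_cm=60))
```

Both measures depend on the assumed screen geometry, so values are only comparable across participants when the pixel density and viewing distance are known or estimated.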

Background - What is Webcam Eye Tracking?

Online eye tracking was first done a little over 5 years ago by Web Gazer and is still used today. This solution works by detecting a signal across a 4-quadrant plane, which can distinguish between left/right and up/down. However, it has been criticized as unrefined because of its high error rate, especially when you move your head, which is bound to happen.

Although Web Gazer has been a pioneer in bringing eye tracking to the worldwide web, it cannot be reliably used for scientific purposes and rigorous experiments. Some alternatives do exist, for example tools for UX, but currently there is no other web eye tracking software that can be used online in a scientific context for psychological experiments studying complex processes like visual attention and cognition.

Our journey began over 3 years ago, when we implemented a deep neural network that takes visual data from a webcam and analyzes it to provide information about the participant’s eye and head movement.

This video describes Labvanced's webcam eye tracking, as well as its accuracy:

Our Process: How does Labvanced’s webcam eye tracking work?

With our web-based eye tracking software driven by a deep neural network, a webcam image can be translated into data points through the following process:

Capture webcam images: From an enabled webcam, multiple frames are captured in real time, producing the images on which face detection is performed. At this step, the user’s graphics card is an important component because neural network computation relies heavily on it (more on this in the next section).

Derive two main data points: While performing face detection using a deep neural network, two main data points are derived.

- Relevant image data from the area around the eyes: the pixels that show the eyes and the area around the eyes.

- Head position and orientation: the pixels that show where the head is positioned and its orientation in space.

Perform calibration: Finally, all eye tracking needs calibration, which is subject-specific because of individual differences in physical characteristics. Our calibration is a strong feature of our webcam eye tracking that arises from the integrated neural network.
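
To make the idea of subject-specific calibration concrete, here is a minimal sketch. It is not Labvanced's method, which this page does not describe in detail. It assumes a model already produces raw gaze estimates and that calibration fits a per-axis gain and offset by least squares, using points where the participant looked at known targets. All function names are hypothetical.

```python
def fit_axis(raw, true):
    """Least-squares gain and offset so that gain * raw + offset approximates true."""
    n = len(raw)
    mean_raw = sum(raw) / n
    mean_true = sum(true) / n
    variance = sum((r - mean_raw) ** 2 for r in raw)
    covariance = sum((r - mean_raw) * (t - mean_true) for r, t in zip(raw, true))
    gain = covariance / variance
    return gain, mean_true - gain * mean_raw


def fit_calibration(raw_points, target_points):
    """Fit independent linear corrections for the x and y axes (an assumed, simplified model)."""
    x_fit = fit_axis([p[0] for p in raw_points], [p[0] for p in target_points])
    y_fit = fit_axis([p[1] for p in raw_points], [p[1] for p in target_points])
    return x_fit, y_fit


def apply_calibration(calibration, point):
    """Map a raw gaze estimate to screen coordinates."""
    (gain_x, offset_x), (gain_y, offset_y) = calibration
    return gain_x * point[0] + offset_x, gain_y * point[1] + offset_y


# Hypothetical raw model outputs recorded while looking at four known screen targets.
raw = [(0.0, 0.0), (1.0, 0.0), (0.0, 1.0), (1.0, 1.0)]
targets = [(100, 50), (300, 50), (100, 250), (300, 250)]
calibration = fit_calibration(raw, targets)
print(apply_calibration(calibration, (0.5, 0.5)))
```

A per-axis linear fit is the simplest possible choice; a real system would likely use a richer mapping that also accounts for head position and orientation.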

About System Architecture and Eye Tracking Data Flow

Let’s take a closer look at the process and how eye tracking is handled.

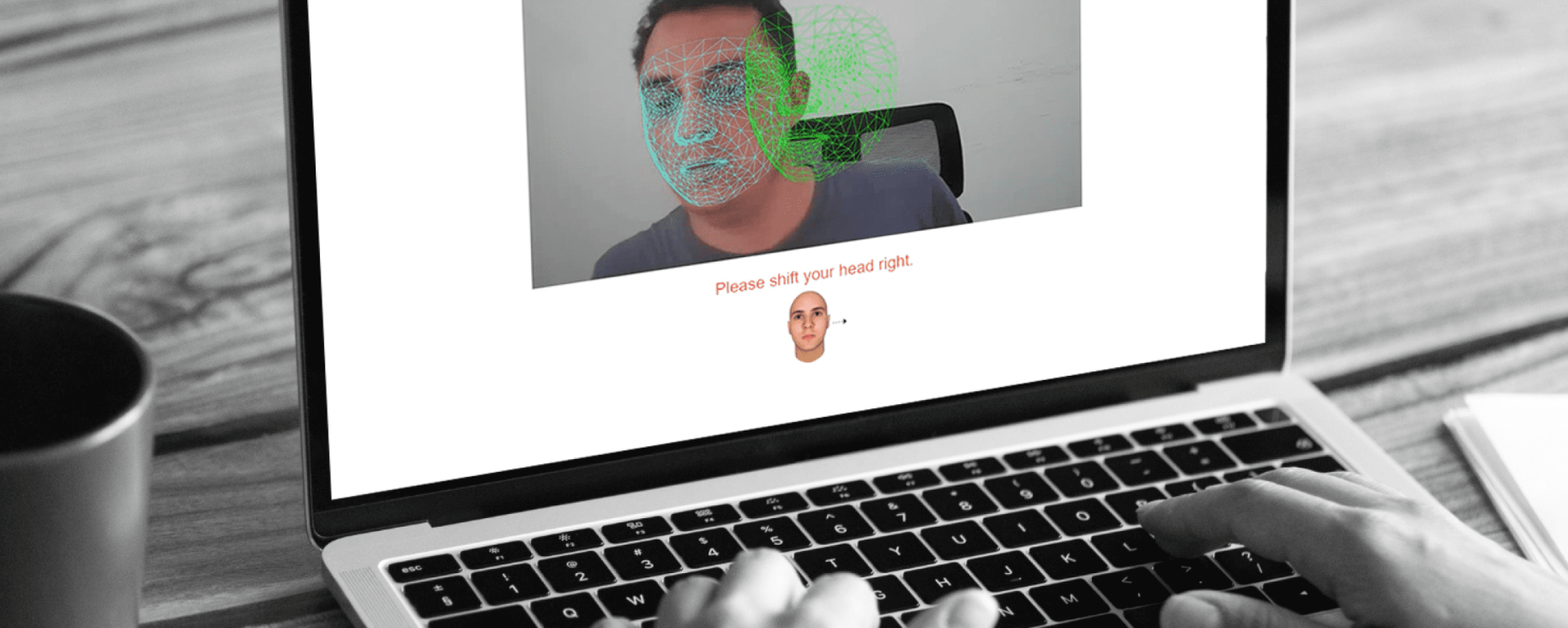

First, as established previously, we get a webcam image and we run a face detection algorithm (to create a mesh around the facial features). This is all happening really quickly and in real time on the user’s device, not on remote servers.

The tradeoff of doing things in real time and on the user’s device is that we are working with the finite resources allotted by the user’s computer/device capabilities. However, note that there is a way around this with post-hoc processing.

Advantages of Labvanced’s Approach to Real-Time Webcam Eye Tracking

While real-time processing is done with limited resources, there are some strong advantages to doing this, such as:

- Real privacy: By performing the calculations on the user’s device (instead of the company’s remote servers), it means there is real privacy for the participant. We never see any face data because it never leaves the participant's device. In essence, the neural network processes the participant-specific gaze-relevant points and the only data that is communicated beyond the participant's device are numeric values, such as x- and y-coordinates. On a related note, Labvanced adheres to GDPR and has VPAT security certifications and provides all such necessary documents to customers who request them.

- Sustainability: Another benefit of real-time processing, in addition to privacy, is sustainability. Locally, video analysis data can be handled more easily. By contrast, sending data to a remote company server increases costs, since processing takes more time and money. Thus, it is more sustainable and economical to run things locally with finite resources than remotely on servers with effectively unlimited resources.

Key Features of Labvanced’s Webcam Eye Tracking

High Accuracy: Webcam eye tracking comes second to hardware-based eye tracking. However, given its affordability and the usability and flexibility it offers, webcam eye tracking is a powerful and viable alternative for many researchers. Labvanced's webcam eye tracking is the leading solution for providing accurate and reliable gaze data.

Customizable Calibration: Our eye tracking technology checks and recalibrates according to your ever-changing environment. This happens automatically, but it is customizable. By default, recalibration occurs in the background after 7 trials. The system recalibrates itself after reassessing influencing factors like luminance changes.

Virtual Chin Rest: During eye tracking experiments, a face mesh is created over the user’s face. Before an experiment begins, the participant must align the mesh that appears over their face with a target mesh. This creates a virtual chin rest that ensures the participant stays within an acceptable zone. When the participant moves away from the chin rest, the task is interrupted and the participant is asked to realign with the virtual chin rest. Try it out yourself here.
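
The logic of a virtual chin rest can be sketched as a tolerance check against a reference head pose captured at alignment. This is an illustrative sketch only: the `HeadPose` fields, the function name, and the tolerance values are assumptions, not Labvanced's actual parameters.

```python
from dataclasses import dataclass


@dataclass
class HeadPose:
    x: float      # horizontal head-centre position, as a fraction of frame width
    y: float      # vertical head-centre position, as a fraction of frame height
    scale: float  # apparent face size, used here as a proxy for distance to the camera


def within_chin_rest(pose, reference, max_shift=0.1, max_scale_change=0.15):
    """Return True while the head stays inside the accepted zone around the reference pose."""
    shift_ok = (
        abs(pose.x - reference.x) <= max_shift
        and abs(pose.y - reference.y) <= max_shift
    )
    scale_ok = abs(pose.scale / reference.scale - 1.0) <= max_scale_change
    return shift_ok and scale_ok


reference = HeadPose(x=0.5, y=0.5, scale=1.0)
print(within_chin_rest(HeadPose(0.55, 0.48, 1.05), reference))  # small movement
print(within_chin_rest(HeadPose(0.80, 0.50, 1.00), reference))  # large sideways shift
```

When the check fails, an experiment would pause the task and ask the participant to realign, as described above.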

Learn more about the virtual chinrest in this video:

Performance check: We also offer a performance check, letting you rest assured that the data from the participants meets your standards. With web-based eye tracking, the speed and performance of the client’s computer can be quantified and the researcher can choose which participants’ data to include in their study. This way, when a participant has a really slow running computer (which would impact the integrity of their results), you will know and you can omit this data from your data set.
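
One way to act on such a performance measure during analysis is to estimate each participant's effective sampling rate from the gaze timestamps and keep only recordings above a chosen threshold. The sketch below assumes timestamps in milliseconds; the function names and the 15 Hz cutoff are hypothetical and should be replaced by whatever standard suits your study.

```python
def sampling_rate_hz(timestamps_ms):
    """Average number of gaze samples per second over a recording."""
    if len(timestamps_ms) < 2:
        return 0.0
    span_ms = timestamps_ms[-1] - timestamps_ms[0]
    if span_ms <= 0:
        return 0.0
    return (len(timestamps_ms) - 1) * 1000 / span_ms


def participants_to_keep(recordings, min_rate_hz=15.0):
    """Return the IDs of participants whose sampling rate meets the threshold."""
    return [
        participant_id
        for participant_id, timestamps in recordings.items()
        if sampling_rate_hz(timestamps) >= min_rate_hz
    ]


# Hypothetical recordings: participant ID mapped to gaze timestamps in milliseconds.
recordings = {
    "p1": [0, 33, 66, 100],     # about 30 samples per second
    "p2": [0, 250, 500, 750],   # about 4 samples per second (slow device)
}
print(participants_to_keep(recordings))
```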

Privacy-Client-side Calculation: As explained previously, we don’t take any data or recordings to our servers. All processing happens locally on the client’s device, allowing for real privacy.

Infant-friendly eye tracking: When creating an experiment with eye tracking in Labvanced, you can use the infant-friendly eye tracking preset settings if you are working with toddlers. We have successfully deployed studies from many universities focusing on this special population and have adapted the technology according to inputs and needs coming directly from researchers. Read more about infant-friendly eye tracking.

Sample Data and Metrics from Webcam Eye Tracking

Eye tracking produces many different metrics, which are then used for data analysis and for drawing conclusions about your experimental question. These metrics rely heavily on gaze location, i.e., the x- and y-coordinates of gaze.

The majority of these metrics are generated during the data analysis stage, after data collection. The basis of these metrics is gaze measurements. If you know the gaze position point at any given time, you can calculate the remaining metrics.

- Gaze location

- Areas of Interest (AOI)

- Number of fixations

- Revisits

- Dwell time / time spent

- Time to first fixation

- First fixation duration

- Average fixation duration

- Fixation sequences
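
To show how metrics like these follow from gaze position over time, here is a small sketch that derives a few AOI measures from raw samples. It assumes samples of the form `(t_ms, x, y)` sorted by time and a rectangular AOI. It works directly on samples rather than detected fixations, so a fixation detection step (for example, a dispersion-based one) would still be needed for fixation counts and durations. All names are hypothetical.

```python
def aoi_metrics(samples, aoi):
    """Compute simple AOI metrics from gaze samples.

    samples: list of (t_ms, x, y) tuples sorted by time
    aoi: (left, top, right, bottom) rectangle in the same pixel coordinates
    """
    left, top, right, bottom = aoi
    dwell_ms = 0
    visits = 0
    time_to_first_look_ms = None
    was_inside = False

    for i, (t, x, y) in enumerate(samples):
        inside = left <= x <= right and top <= y <= bottom
        if inside:
            if not was_inside:
                visits += 1  # gaze entered the AOI
                if time_to_first_look_ms is None:
                    time_to_first_look_ms = t - samples[0][0]
            if i + 1 < len(samples):
                # Credit the interval until the next sample to the AOI.
                dwell_ms += samples[i + 1][0] - t
        was_inside = inside

    return {
        "dwell_ms": dwell_ms,
        "visits": visits,
        "revisits": max(visits - 1, 0),
        "time_to_first_look_ms": time_to_first_look_ms,
    }


# Hypothetical gaze samples and an AOI covering x 200-300, y 100-200.
samples = [(0, 10, 10), (100, 210, 110), (200, 220, 120),
           (300, 10, 10), (400, 230, 130), (500, 10, 10)]
print(aoi_metrics(samples, (200, 100, 300, 200)))
```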

This video discusses the data from our eye tracking technology:

Collecting Eye Tracking Data from Labvanced

With a few clicks, you can set up your experiment to record the data you desire by selecting one of the many options and creating variables to record them.


Fig. 2: Setting variables in Labvanced’s eye tracking app to record experimental data about gaze.

Here, in Fig. 2, a variable is created to record the gaze x- and y-coordinates, timestamps, and confidence levels.

A sample data set from Labvanced’s eye tracking app is shown below in Fig. 3.


Fig. 3: Time series data view, with the last four columns showing the x-coordinate, y-coordinate, UNIX time, and confidence score.

The confidence level (column C) reflects how confident we are in the measurement and is affected by blinking. Measurements from wide-open eyes receive higher confidence than measurements from an eye that is half-open or in the process of blinking.

Understand how eye tracking is set up in a Labvanced study by following this walkthrough, where SVGs are used to detect fixations over Areas of Interest (AOIs), or this other step-by-step walkthrough, where eye tracking is used in an Object Discrimination Task.
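
During analysis, the confidence column can be used to drop unreliable samples such as those recorded mid-blink. The sketch below assumes each row is a dictionary with a `confidence` value between 0 and 1. Both the row layout and the 0.5 threshold are assumptions for illustration, not the exported format or a recommended cutoff.

```python
def filter_by_confidence(rows, min_confidence=0.5):
    """Keep rows at or above the threshold and report the proportion kept."""
    kept = [row for row in rows if row["confidence"] >= min_confidence]
    proportion_kept = len(kept) / len(rows) if rows else 0.0
    return kept, proportion_kept


# Hypothetical gaze rows: coordinates in pixels, UNIX time in ms, confidence score.
rows = [
    {"x": 512, "y": 300, "t": 1700000000000, "confidence": 0.9},
    {"x": 515, "y": 310, "t": 1700000000033, "confidence": 0.2},  # likely a blink
    {"x": 520, "y": 305, "t": 1700000000066, "confidence": 0.7},
    {"x": 480, "y": 290, "t": 1700000000100, "confidence": 0.4},
]
kept, proportion = filter_by_confidence(rows)
print(len(kept), proportion)
```

Reporting the proportion of samples kept alongside the results makes it easier to judge whether a participant's data is still usable after filtering.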

Examples of Incorporating Webcam Eye Tracking Data in Experiment Design

When setting up your study on Labvanced, you can set up the variables and events to do many things. For example, you can use:

- Gaze as the input / response: During the experiment, you can use gaze to select one of two objects by looking at it for a predetermined amount of time.

- Gaze to control something: Instead of having the participant use keyboard inputs to control elements in the experiment, you can create a feedback response so that users can control or select objects in real time with their gaze.

- Gaze to affect a property change: Here, you can control gaze-contingent displays. A classic example of this is change blindness. In such an experiment, you can track when a participant looks at the left side of the screen and, when this happens, change a property (like color) of an object on the right-hand side.

- Gaze to dynamically control the flow of an experiment: Eye movements can be used to determine how an experiment trial sequence progresses. For example, if a participant looks at red stimuli, then another trial will follow with red stimuli as opposed to one with blue stimuli.

- Gaze broadcasting in social experiments: In multi-user experiments, gaze can be ‘broadcast’ from one person to another, so that a participant can see where their partner or opponent is looking while performing a 2-person experiment together.

- Gaze to track reading tasks: Linguistic research uses this aspect of eye tracking to follow how participants read passages and to quantify how much time they need to finish reading a passage.

- Gaze for quality control in crowdsourced experiments: By activating eye tracking in crowdsourced experiments, a researcher can ensure that participants actually spent some time reading the task instructions.
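
The first idea in the list above, using gaze as a response by looking at an object for a predetermined time, can be sketched as a dwell-time selection. In Labvanced this is configured through variables and events rather than written as code, so the following is only a conceptual sketch with hypothetical names, object rectangles, and an assumed 800 ms dwell threshold.

```python
def dwell_select(samples, objects, dwell_ms=800):
    """Return the first object looked at continuously for dwell_ms, or None.

    samples: list of (t_ms, x, y) tuples sorted by time
    objects: mapping of object name to (left, top, right, bottom) rectangle
    """
    current = None  # object currently under the gaze
    start = None    # time at which the gaze entered the current object
    for t, x, y in samples:
        hit = next(
            (
                name
                for name, (left, top, right, bottom) in objects.items()
                if left <= x <= right and top <= y <= bottom
            ),
            None,
        )
        if hit != current:
            current, start = hit, t  # gaze moved to a different object (or off all objects)
        if current is not None and t - start >= dwell_ms:
            return current
    return None


objects = {"left": (0, 0, 100, 100), "right": (200, 0, 300, 100)}
samples = [(0, 50, 50), (300, 50, 50), (600, 250, 50),
           (900, 250, 50), (1200, 250, 50), (1500, 250, 50)]
print(dwell_select(samples, objects))
```

The same pattern, with a different action on selection, covers gaze-contingent property changes and gaze-driven trial branching.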

Check out this video where we walk through creating an eye tracking study with special settings and features such as chat boxes, variable distribution, and feedback:

Research Papers Using Labvanced's Webcam-based Eye Tracking

Below is a collection of recently published research papers that make use of Labvanced's webcam eye tracking as a part of their methodology for capturing gaze:

- Sander, I., et al. (2024). Beyond built density: From coarse to fine-grained analyses of emotional experiences in urban environments. Journal of Environmental Psychology.

- Alp, N., Lale, G., Saglam, C., & Sayim, B. (2024). The effect of processing partial information in dynamic face perception. Scientific Reports.

- Stone, S. A., & Chapman, C. S. (2023). Unconscious Frustration: Dynamically Assessing User Experience using Eye and Mouse Tracking. Proceedings of the ACM on Human-Computer Interaction.

- Bertrand, J. K., & Chapman, C. S. (2023). Dynamics of eye-hand coordination are flexibly preserved in eye-cursor coordination during an online, digital, object interaction task. Proceedings of the 2023 CHI Conference on Human Factors in Computing Systems.

- Wies, S., Bleier, A., & Edeling, A. (2022). Finding Goldilocks Influencers: How Follower Count Drives Social Media Engagement. Journal of Marketing.

Labvanced Library Studies

The Labvanced library is full of study templates that can be imported and adjusted to include eye tracking. Below we provide a few example studies that demonstrate our eye tracking technology:

- Target Distractor Task: A classic eye-tracking paradigm (target ‘T’, distractor ‘P’) in which participants have to look at the target immediately to find it.

- Infant-Friendly Eye Tracking: A task based on preferential looking which begins with infant-friendly calibration.

- Free Viewing: Shows a variety of images and explores where the participant’s gaze falls on each image. Useful for experiments that aim to predict where a participant will look based on existing image features.

- Gaze Feedback Test: Here you can see your own gaze as immediate feedback: a red circle appears at the spot where the system predicts you are looking.

- Spatial Accuracy Test: A standardized calibration test in which participants fixate on different locations on a grid. The fixation is recorded for each point, and the distance between the point on the grid and the predicted gaze point is computed.

Again, try this step-by-step walkthrough and practice building a sample eye tracking study in our app!

Need More Info?

If you need more support or are curious about a particular feature, please reach out to us via chat or email. We are more than happy to hear about your research and walk you through any questions about the platform and discuss project feasibility.