Music Research with Labvanced | Psychology Experiments

Here are a few examples of music research findings from scientists who used Labvanced to run their psychology experiments and collect data! Below, you can find the music research paper topics, titles, and key findings, as well as a general overview of how each music psychology experiment was set up in Labvanced.

Research on music spans several topics. Below, we have grouped the studies into themes / research topics:

Contents - Music Research On:

- Appreciation / Aesthetics: Research papers about appreciation and aesthetics as they relate to music, including research on the psychology of music preference.

- Cognition: Includes examples of research on music and emotions, as well as research on music and personality.

- Music Education and Performance: Findings on music education research topics, such as performance and score reading.

- Music & Language: Research on the relationship between music and language, as well as music and memory.

- Social Psychology & Music: Findings from multi-user studies focusing on music research.

- Notable Features for Music Research Experiments: Highlighted features in Labvanced useful for conducting research on music.

1. Research on Music Appreciation / Aesthetics

The role of audiovisual congruence in perception and aesthetic appreciation of contemporary music and visual art

- Authors / Journal: Fink, L., Fiehn, H., & Wald-Fuhrmann, M. (2023) in PsyArXiv

- Overview: The study aims to determine whether the ‘Kiki-Bouba effect’ transfers to complex, multi-dimensional stimuli like contemporary art and music. The research was conducted in collaboration with the Kentler International Drawing Space (NYC, USA), with material taken from the Music as Image and Metaphor exhibition, for which music was specifically composed for each work of art.

- Labvanced experiment: The online experiment consisted of 4 conditions: Audio, Visual, Audio-Visual-Intended (the artist-intended pairing of art and music), and Audio-Visual-Random (randomly shuffled pairings). Participants (N=201) were presented with 16 pieces and could click to proceed to the next piece whenever they liked. After each piece, they were asked about their subjective experience. The experiment is available here: https://www.labvanced.com/player.html?id=33023
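The Audio-Visual-Random condition relies on shuffling the artist-intended pairings. As an illustration only, here is a minimal Python sketch of one way to do that outside Labvanced. It assumes (our assumption, not stated by the authors) that a random pairing should never reproduce an intended one; the function name `random_pairings` and the integer piece IDs are hypothetical.

```python
import random


def random_pairings(n_pieces: int, rng: random.Random) -> list[int]:
    """Return a list p where artwork i is paired with music p[i].

    Uses rejection sampling: reshuffle until no artwork keeps its
    artist-intended music (index i), i.e. until p is a derangement.
    Roughly 1 in e shuffles succeeds, so this terminates quickly.
    """
    if n_pieces < 2:
        raise ValueError("need at least two pieces to avoid intended pairings")
    music = list(range(n_pieces))
    while True:
        rng.shuffle(music)
        if all(m != i for i, m in enumerate(music)):
            return list(music)


# Hypothetical usage: 16 pieces, seeded so one participant's pairing is reproducible.
rng = random.Random(2023)
pairs = random_pairings(16, rng)
for art, mus in enumerate(pairs[:3]):
    print(f"artwork {art} is shown with music {mus}")
```

Seeding per participant is one design choice that keeps each participant's shuffled pairing reproducible for later analysis.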

- Key finding: The Audio-Visual-Intended pieces (i.e., the pieces where the music was composed for the specific artwork) were perceived to have greater correspondence than those in the Audio-Visual-Random condition.

Example of artwork from the experiment / exhibition

Perceptual (but not acoustic) features predict singing voice preferences

- Authors / Journal: Bruder, C., Poeppel, D., & Larrouy-Maestri, P. (2024) in Scientific Reports

- Overview: The study aimed to determine what drives participants’ preferences when ‘liking’ a vocalist by assessing perceptual and acoustic features, an important topic in the psychology of music preference.

- Labvanced experiment:

- Perceptual rating scales were developed for this experiment: bipolar scales ranging from 1 to 7, with contrasting anchor words at each pole, on which participants rated the following: pitch accuracy, loudness, tempo, articulation, breathiness, resonance, timbre, attack/voice onset, and vibrato. Forty-two participants rated 96 stimuli on 10 different scales.

- 18-item Musical Sophistication subscale of the Goldsmiths Musical Sophistication Index (Gold-MSI)

- Ten-Item Personality Inventory (TIPI)

- Revised Short Test of Music Preferences (STOMP-R)

- Key finding: Acoustic and low-level features derived from music information retrieval (MIR) explained little of the variance in participants’ liking ratings. In contrast, perceptual features of the voices achieved around 43% prediction, suggesting that singing voice preferences are grounded not in acoustic attributes per se but in features as perceptually experienced by the listeners. This finding shows the importance of individual perception for the psychology of music preference.

Parallelisms and deviations: two fundamentals of an aesthetics of poetic diction

- Authors / Journal: Menninghaus, W., et al. (2024) in the Philosophical Transactions of the Royal Society B

- Relevance: Research on music perception has shown that rhythmic and melodic features build up expectations in listeners that shape their aesthetic experience. Similarly, melodic properties of poem recitations enhance the perceived aesthetic and musical qualities of recited poems.

- Study: The researchers developed novel quantitative measures to capture the frequency/density of patterns of parallelism and deviation in the poems, proverbs, and humorous couplets presented in this study. These scores were used as predictors of the participants’ cognitive and aesthetic evaluations. Participants were divided into groups based on their music preferences and ability, using measures like the musical rhythm ability test (RAT). Corpora of various text genres served as stimuli, and participants rated the texts on three dimensions: cognitive processing, aesthetics, and music-analogous properties.

- Findings: Among other results, the Parallelism score predicted Melodiousness, and the Deviation vs. Parallelism scores (across all text genres) were powerful predictors of positive vs. negative cognitive and aesthetic effects, showing the importance of predictability for aesthetic judgement.

2. Research on Music and Cognition

Modulation through Music after Sadness Induction - The Iso Principle in a Controlled Experimental Study

- Authors / Journal: Starcke, K., Mayr, J., & von Georgi, R. (2021) in the International Journal of Environmental Research and Public Health

- Overview: This study falls within the area of research on music and emotions and demonstrates how music can modulate emotional states.

- Labvanced experiment: A combination of scales and tests was used in this music psychology experiment to assess the influence of music on emotion after participants had been successfully induced into a ‘sad’ state by watching a movie clip, while taking the participants’ individual characteristics into account. Key elements used in the study:

- German translation of the Short Test of Music Preferences (STOMP) - measures genre preferences

- German version of the Positive and Negative Affect Schedule (PANAS) - measures trait positive / negative affect

- Short Eysenck Personality Profiler with NEO-PI-R Openness (SEPPO) - measures participants’ personality

- Inventory for the assessment of Activation and Arousal modulation through Music (IAAM) - measures participants’ use of music in everyday life

- Sadness induction - a clip from the movie “The Champ” (1979)

- Four pieces of music were used in this study: two happy pieces [“Blue Danube” (Johann Strauss, 1867) and the Romance from “A Little Night Music” (Wolfgang Amadeus Mozart, 1787)] and two sad pieces [“Kol Nidrei” (Max Bruch, 1880) and the second movement of “Suite in A-Minor” (Christian Sinding, 1889)]. The pieces were chosen mainly on the basis of a previous study that established their respective valence ratings and accompanying brain activations.

- Self-Assessment Manikin (SAM) - measures emotional state during the experiment; participants rated their current emotional valence

- Findings: The group of participants who listened to the sad music first and the happy music afterwards ultimately reported a higher positive affect, a higher emotional valence, and a lower negative affect compared with the other groups. This contributes to the field of research on music and emotions by showing how affect is modulated by music based on the current emotional state.

- Check out this Researcher Interview with Katrin Starcke about the research above

Craving for music increases after music listening and is related to earworms and personality

- Authors / Journal: Starcke, K., Lüders, F. G., & von Georgi, R. (2023) in Psychology of Music

- Overview: The study aimed to investigate the craving for music and its psychological correlates.

- Labvanced experiment: Participants’ craving for music was assessed via questionnaire before and after they listened to a song. Earworms were also assessed before and after music listening. Finally, personality traits were measured. The following measures and tasks were used:

- Short Test of Music Preferences - to assess musical preferences

- Short Eysenck Personality Profiler and the NEO PI-R Openness scale - to assess personality

- A modified version of the Desires for Alcohol Questionnaire - to establish the participants’ current craving for music

- The Musical Imagery Questionnaire, modified to ask about earworms as a current state - to determine the strength of potential earworms

- Participants listened to a song they chose from a list of eight songs: “Riders on the Storm” (The Doors), “Let It Be” (The Beatles), “Gangsta’s Paradise” (Coolio), “Shape of You” (Ed Sheeran), “Ievan Polkka” (Loituma), “Smells Like Teen Spirit” (Nirvana), “September” (Earth, Wind & Fire), and “Despacito” (Luis Fonsi ft. Daddy Yankee).

- Findings: The results indicated that craving for music significantly increased after listening to a song. The same was observed for earworms. Craving for music and earworms were significantly related, and baseline craving was also related to certain individual traits like psychoticism and openness to experience.

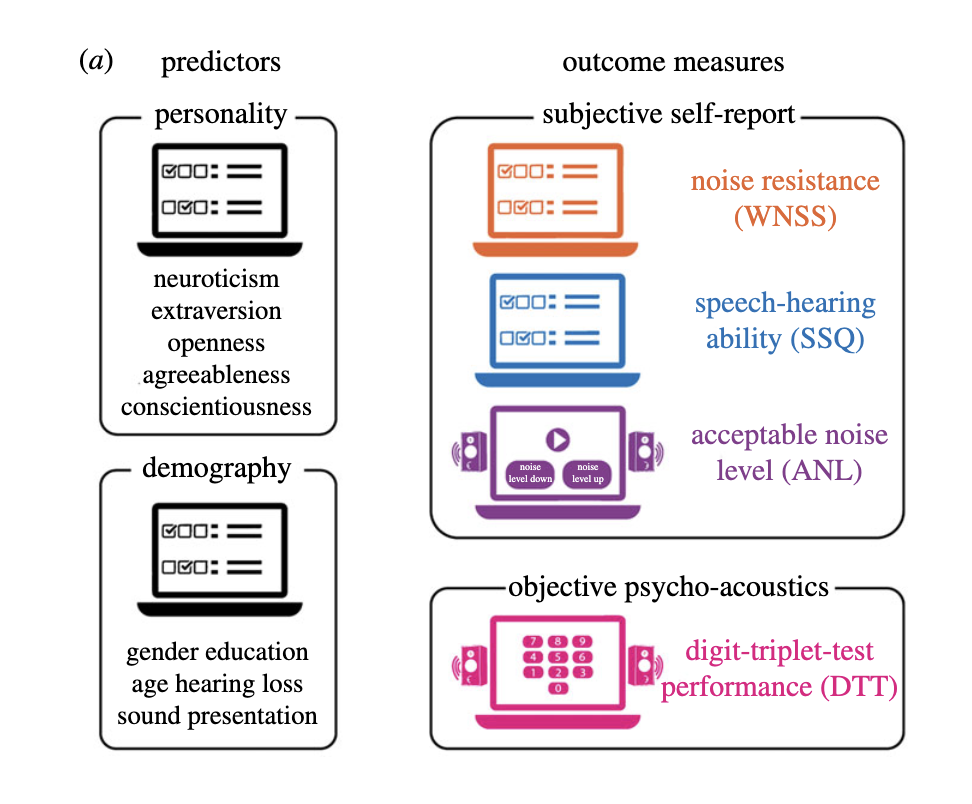

Personality captures dissociations of subjective versus objective hearing in noise

- Authors / Journal: Wöstmann, M., Erb, J., Kreitewolf, J., & Obleser, J. (2021) in Royal Society Open Science

- Overview: The interplay between noise, music, and perception is relevant for music research. Sensitivity to noise can explain differences in people’s listening behavior; for example, higher noise sensitivity can be associated with less time spent listening to music passively.

- Labvanced experiment:

- Demographic information, including data on musicality (i.e., the number of years spent playing a musical instrument and the age at which the participant started playing one)

- BFI-S: personality questionnaire assessing the Big Five dimensions

- WNSS: Noise resistance questionnaire

- SSQ: speech-hearing ability questionnaire

- ANL: acceptable noise level test

- DTT: adapted digit-triplet-test to determine speech-in-noise reception

- NASA Task Load Index: after the DTT, participants completed two short questionnaires assessing aspects of listening effort.

- Findings: Lower neuroticism and higher extraversion independently explained superior self-reported noise resistance, as well as speech-hearing ability and acceptable background noise levels. Interestingly, objective speech-in-noise recognition improved with higher levels of neuroticism. Relatedly, the tendency to overrate one’s own hearing in noise decreased with higher neuroticism but increased with higher extraversion. These findings have implications for hearing in noise and for audiological treatment with respect to individual differences.

- Check out this Researcher Interview with Dr. Malte Wöstmann discussing the research above.

3. Research on Music Education and Performance

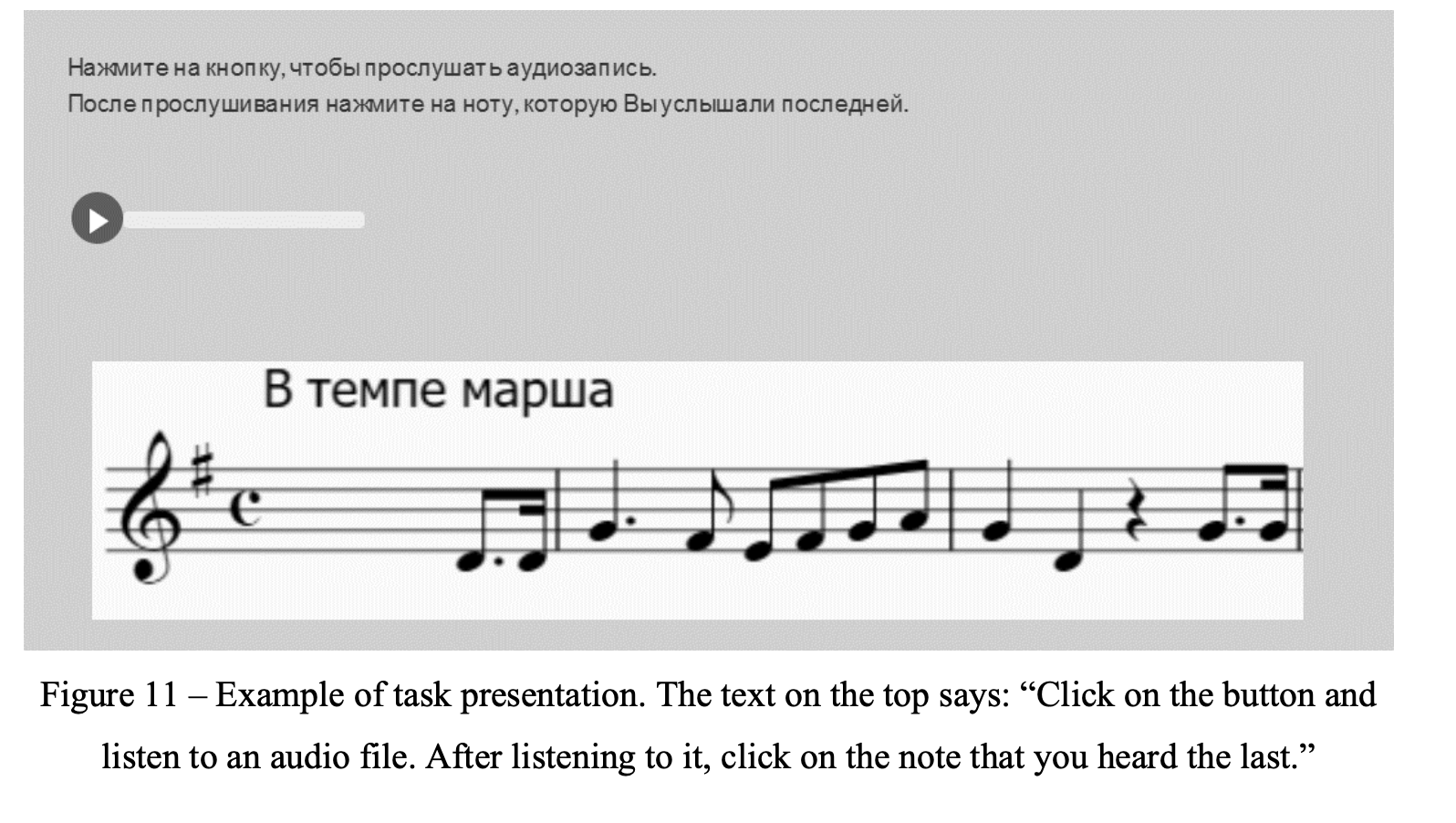

Attention Distribution During Music Score Reading

- Author: Bushmakina, A.N. (2023) Student Thesis from Tomsk State University

- Overview: The aim of this research was to objectively assess how well one reads a music score by quantifying correct responses and overall response time.

- Labvanced experiment: A total of 35 musical pieces were chosen across five information levels: no information (where only auditory discrimination of individual sounds can be applied), pitch, rhythm, full information (the unmodified musical notation, presenting pitch and rhythm in their interaction), and note names. For every musical piece, a pair of visual and auditory stimuli was created. Each visual stimulus consisted of the first twelve notes of the piece in either unmodified or modified notation, depending on its information level. The image below represents an example task from the ‘full information’ condition, where the participant is instructed: “Click on the button and listen to an audio file. After listening to it, click on the note that you heard last.” The audio stops at a certain point, and the participant must indicate where in the corresponding score the sound stopped by clicking on the correct note on the sheet.

- Results: Full information tasks took musicians the least time to perform, indicating that musicians navigate sheet music well in its full form. When the tasks were ranked by total performance efficiency (estimated from the lowest error weight first and the shortest response time second), the order for musicians was: full information, pitch, note names, rhythm, no information. For non-musicians, the order from best to worst performance was: note names, no information, pitch, rhythm, full information.
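The efficiency ranking described above is a lexicographic ordering: error weight decides first, and response time only breaks ties. A minimal Python sketch, assuming a hypothetical per-level summary (`error_weight`, `mean_rt_ms`); how the thesis computes the error weight is not described here, so the values in the usage line are placeholders, not study data.

```python
from dataclasses import dataclass


@dataclass
class LevelScore:
    level: str
    error_weight: float  # hypothetical aggregate error weight (lower is better)
    mean_rt_ms: float    # mean response time in ms (lower is better)


def rank_by_efficiency(scores: list[LevelScore]) -> list[str]:
    """Order levels best-first: lowest error weight first, response time breaks ties."""
    ordered = sorted(scores, key=lambda s: (s.error_weight, s.mean_rt_ms))
    return [s.level for s in ordered]


# Placeholder values only, labelled A/B/C to avoid confusion with real results.
scores = [
    LevelScore("A", 2.0, 900.0),
    LevelScore("B", 1.0, 1500.0),
    LevelScore("C", 1.0, 1200.0),
]
print(rank_by_efficiency(scores))  # ['C', 'B', 'A']
```

Sorting on a tuple key is what enforces the "in that order of priority" rule: level A is fastest but still ranks last because its error weight is highest.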

Classical singers are also proficient in non-classical singing

- Authors / Journal: Bruder, C., & Larrouy-Maestri, P. (2023) in Frontiers in Psychology

- Overview: The aim of this music psychology experiment was to determine how proficient classical singers are in other genres.

- Labvanced experiment: Twenty-two highly trained female classical singers (with vocal training ranging from 4.5 to 27 years) performed six different melody excerpts a cappella in three styles: as an opera aria, as a pop song, and as a lullaby. All melodies were sung both with lyrics and on a /lu/ syllable, and these recordings were later used as stimuli for participants to classify. The singers’ vocal productions were acoustically analyzed in terms of seven common acoustic descriptors of voice/singing performance and perceptually evaluated by a total of 50 lay listeners (aged 21 to 73 years) who were asked to identify the intended singing style in a forced-choice experiment. Participants indicated whether each stimulus sounded like a lullaby, a pop song, or an opera aria by clicking on the respective answer. One group of participants (Group 1, N = 25) heard only the performances with lyrics (395 trials), while the other group (Group 2, N = 25) heard only the performances sung on /lu/ (393 trials).

- Results: The overall style recognition rate was high, at 78.5% correct responses (CR). Measured this way, the singers’ proficiency was 86% CR for operatic style, 80% CR for lullabies, and 69% CR for pop performances, with occasional confusion between the latter two styles. Interestingly, individual singers differed in competence, with versatility ranging from 62 to 83%. The researchers noted that this variability "was not linked to formal training per se."
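Recognition rates like these come from comparing each listener’s choice with the intended style. The following Python sketch assumes responses are stored as hypothetical `(intended, chosen)` pairs and computes overall and per-style percent correct plus confusion counts; it is an illustration, not the authors’ analysis code, and the trials in the usage lines are placeholders.

```python
from collections import Counter

STYLES = ("opera", "lullaby", "pop")


def recognition_rates(trials):
    """Summarize forced-choice style judgements.

    trials: iterable of (intended_style, chosen_style) pairs, one per response.
    Returns (overall % correct, {style: % correct}, Counter of (intended, chosen)).
    """
    confusion = Counter(trials)
    total = sum(confusion.values())
    correct = sum(n for (intended, chosen), n in confusion.items() if intended == chosen)
    per_style = {}
    for style in STYLES:
        n_style = sum(n for (intended, _), n in confusion.items() if intended == style)
        hits = confusion[(style, style)]  # Counter returns 0 for unseen pairs
        per_style[style] = 100 * hits / n_style if n_style else float("nan")
    overall = 100 * correct / total if total else float("nan")
    return overall, per_style, confusion


# Placeholder responses, not study data.
trials = (
    [("opera", "opera")] * 3 + [("opera", "pop")]
    + [("pop", "pop")] * 2 + [("pop", "lullaby")] * 2
    + [("lullaby", "lullaby")] * 2
)
overall, per_style, confusion = recognition_rates(trials)
print(overall)  # 70.0
```

Off-diagonal cells such as `confusion[("pop", "lullaby")]` are where confusion between two styles would show up.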

4. Research on Music & Language

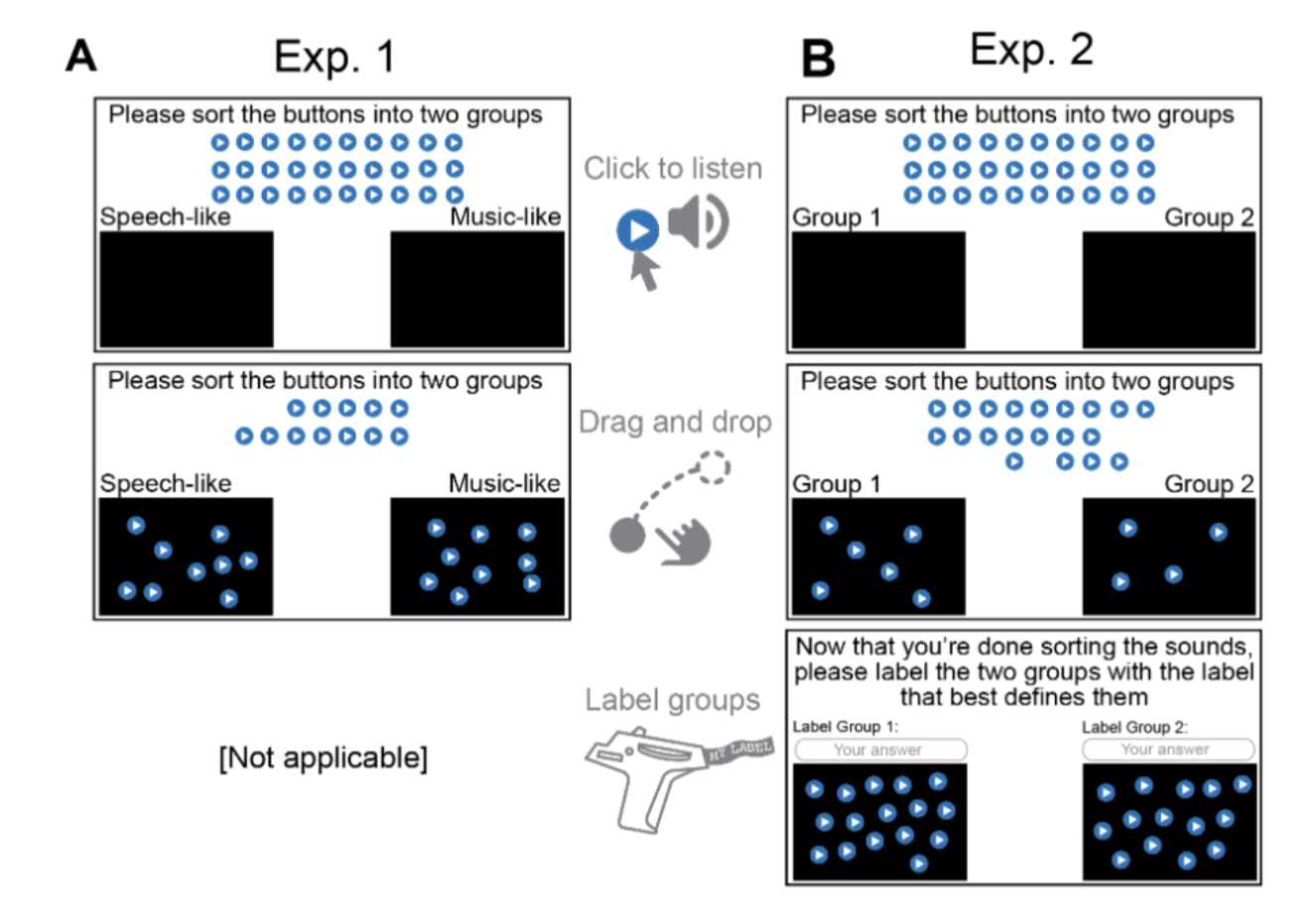

Features underlying speech versus music as categories of auditory experience

- Authors / Journal: Fink, L., Hörster, M., Poeppel, D., Wald-Fuhrmann, M., & Larrouy-Maestri, P. (2023) in PsyArXiv

- Overview: This study investigated how listeners categorize sounds by asking participants to classify clips as ‘speech-like’ or ‘music-like’. A different set of participants was asked to sort the sounds into two groups without being told which criterion to use.

- Labvanced experiment: Using 30 recordings of dùndún drumming (a West African drum that is also used as a speech surrogate), the researchers aimed to identify potential predictors of music-speech categories. Fifteen of the recordings were treated as ‘music’ and consisted of Yorùbá àlùjó (dance) rhythms, while the other 15 were ‘speech surrogate’ recordings containing Yorùbá proverbs and oríkì (poetry). The experimental setup in Labvanced instructed participants to categorize the stimuli by drag & drop, and participants could play each stimulus freely (see image below). Different participants took part in each experiment. In the first experiment, the categories were provided (‘speech-like’ and ‘music-like’), while in the second experiment, participants had to decide for themselves which two categories distinguished the sounds and then label them.

- Findings: Hierarchical clustering of participants’ stimulus groupings shows that the speech/music distinction does emerge and is observable, but it is not the primary one. Further analysis of the free-response task showed that the labels assigned by participants converge with acoustic predictors of the categories. This finding supports an effect of priming in discriminating between music and speech and sheds new light on the mechanisms by which such common auditory signals are categorized.
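Grouping data from a drag & drop sorting task is often summarized as a co-assignment matrix before clustering. As a hedged sketch (the authors’ exact pipeline is not described here), this Python snippet computes, for every pair of stimuli, the proportion of participants who placed them in the same group; the data layout, one dict per participant mapping a stimulus ID to a group label, is a hypothetical assumption.

```python
def co_assignment(groupings, stimuli):
    """Proportion of participants who put each pair of stimuli in the same group.

    groupings: list of dicts, one per participant, mapping stimulus id -> group label.
    stimuli: list of stimulus ids fixing the row/column order.
    Returns a symmetric matrix (list of lists) with 1.0 on the diagonal.
    """
    n = len(stimuli)
    counts = [[0] * n for _ in range(n)]
    for g in groupings:
        for i, s in enumerate(stimuli):
            for j, t in enumerate(stimuli):
                if g[s] == g[t]:
                    counts[i][j] += 1
    p = len(groupings)
    return [[c / p for c in row] for row in counts]


def to_distance(sim):
    """Convert co-assignment proportions to distances (0 = always grouped together)."""
    return [[1.0 - v for v in row] for row in sim]


# Two hypothetical participants sorting three clips; group labels are arbitrary.
groupings = [{"a": 1, "b": 1, "c": 2}, {"a": "x", "b": "y", "c": "y"}]
sim = co_assignment(groupings, ["a", "b", "c"])
dist = to_distance(sim)
```

Because only equality of labels matters, participants’ own free-response labels can be used directly. The distance matrix could then be converted to condensed form with SciPy’s `scipy.spatial.distance.squareform` and passed to `scipy.cluster.hierarchy.linkage` for agglomerative clustering.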

Non-native tone perception - When music outweighs language experience

- Authors / Journal: Götz, A., & Liu, L. (2023) in ICPhS 2023

- Overview: The aim of this research was to determine whether language experience (e.g., bilingualism, L2) and music experience (e.g., years of practising) improve lexical tone perception. In this study, 532 participants from L1 Mandarin, L1 non-tone, bilingual L1 non-tone & L2 non-tone, and bilingual L1 non-tone & L2 tone backgrounds were tested on their ability to discriminate between Mandarin tones.

- Labvanced experiment: AXB discrimination task. Participants were asked to press a key, as accurately and quickly as possible, to indicate whether the second syllable was more similar to the first (AAB, key 1) or to the third (ABB, key 3). The interstimulus interval was 1000 ms and the intertrial interval was 3000 ms. The response time-out was 2500 ms, measured from the end of the third syllable. The stimuli consisted of 12 monosyllabic Mandarin non-words with legal phonotactic structures, each produced with the four Mandarin tones (T1, T2, T3 and T4). Each syllable was 250 ms long. The final stimulus set consisted of 72 stimuli: 12 syllables × 6 tone contrasts (T1-T2, T1-T3, T1-T4, T2-T3, T2-T4, T3-T4).
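The stimulus arithmetic and trial structure of the AXB task can be made concrete in a few lines. This Python sketch enumerates the 6 tone contrasts (every unordered pair of the 4 tones) and crosses them with 12 syllables. The syllable labels are placeholders because the actual non-words are not listed here, and treating the 1000 ms ISI as the gap between each pair of consecutive syllables is our assumption.

```python
from itertools import combinations

TONES = ("T1", "T2", "T3", "T4")
# Placeholder labels: the 12 Mandarin non-words themselves are not listed in the source.
SYLLABLES = [f"syl{i:02d}" for i in range(1, 13)]

# Timing parameters reported for the task (ms).
SYLLABLE_MS = 250
ISI_MS = 1000
ITI_MS = 3000
TIMEOUT_MS = 2500  # counted from the end of the third syllable

TONE_CONTRASTS = list(combinations(TONES, 2))  # 4 choose 2 = 6 unordered pairs


def build_stimuli():
    """One stimulus per (syllable, tone contrast): 12 x 6 = 72."""
    return [(syl, a, b) for syl in SYLLABLES for a, b in TONE_CONTRASTS]


def axb_trial(syl, tone_a, tone_b, matches_first):
    """Return the three tokens of an AXB trial and the correct response key.

    matches_first=True  -> A A B (X equals the first token, key "1");
    matches_first=False -> A B B (X equals the third token, key "3").
    """
    x = tone_a if matches_first else tone_b
    tokens = [(syl, tone_a), (syl, x), (syl, tone_b)]
    return tokens, "1" if matches_first else "3"


def response_deadline_ms():
    """Trial onset to time-out, assuming an ISI after the first and second syllables."""
    return 3 * SYLLABLE_MS + 2 * ISI_MS + TIMEOUT_MS


stimuli = build_stimuli()
print(len(TONE_CONTRASTS), len(stimuli))  # 6 72
```

Using `combinations` rather than `permutations` matches the listed contrasts, which treat T1-T2 and T2-T1 as the same pair.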

- Findings: Results revealed that neither bilingual nor second-language (tone or non-tone) experience affects novel tone perception. However, listeners’ years of music training significantly predicted perception outcomes regardless of their language backgrounds. These results suggest that learning a musical instrument can support tone perception across language groups, pointing to a cross-domain effect in processing linguistic and musical pitch. They also indicate that language learning on its own may not guarantee advanced tone discrimination.

5. Research on Social Psychology & Music

Perceived Emotional Synchrony in Virtual Watch Parties

- Author: Drewery, D.W. (2022) Student Thesis from the University of Waterloo

- Overview: ‘Watch parties’, where friends stream events together virtually, are becoming more and more common. A virtual watch party was simulated in Labvanced: participants were told they were taking part in the study together with other participants and got to choose what to watch together from a drop-down list of categories: Culture, Recent History, or Music.

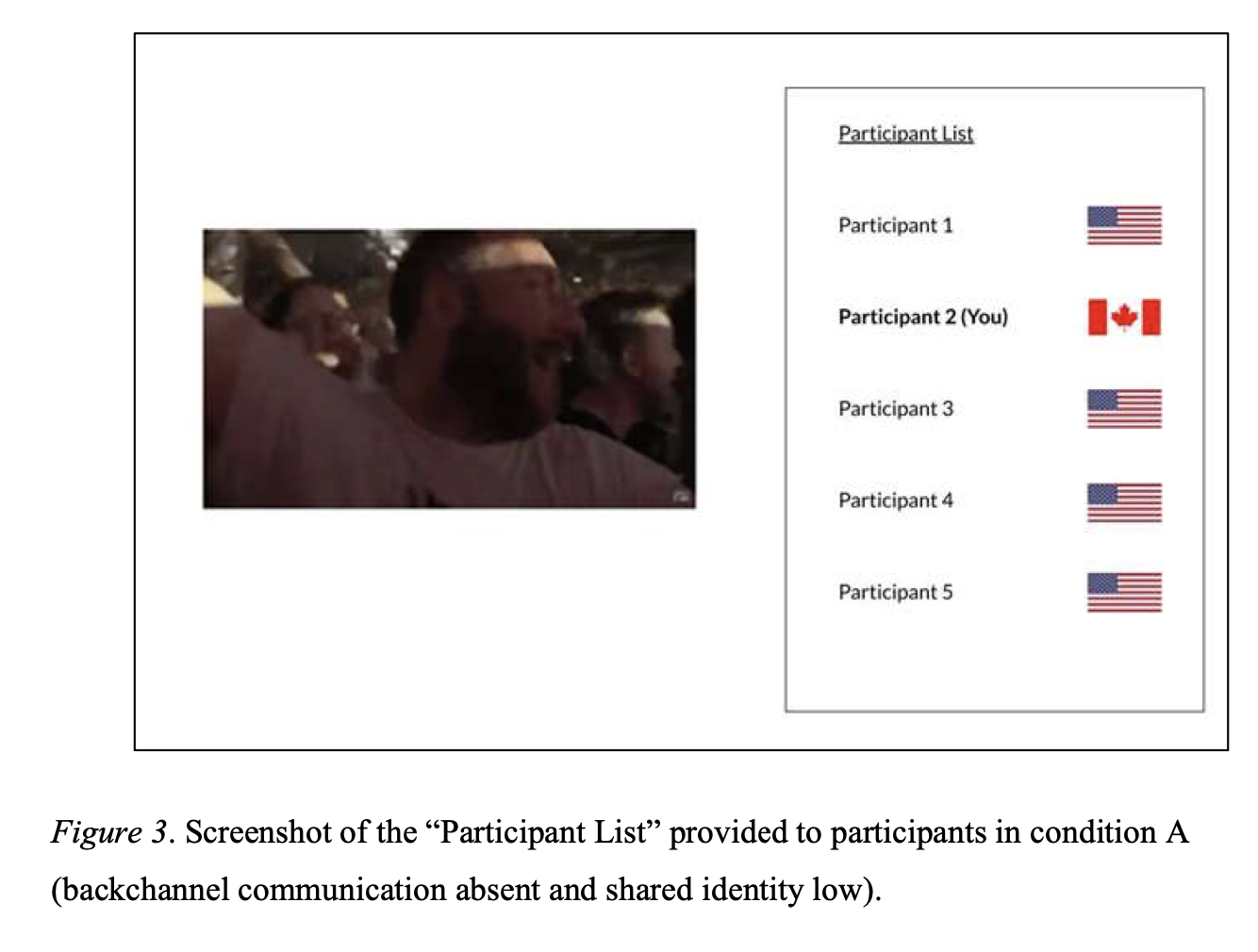

- Labvanced experiment: The presented video was the same in all conditions because it fit all three categories: the final song of a Canadian band’s farewell concert, which 11.5 million viewers watched via live stream. Participants were randomly assigned to one of five conditions: control, high vs. low shared identity, and absent vs. present backchannel communication (see image below for an example condition). Both factors were simulated. Participants filled out questionnaires on their identity, perceived emotional synchrony with others, shared attention, mentalization, and positive emotional responses.

- Findings: The results showed that virtual experiences offer opportunities for perceived emotional synchrony. Shared attention was positively associated with perceived emotional synchrony even in a virtual setting, in this case a virtual watch party. Furthermore, the social context of shared attention itself influenced perceived emotional synchrony. With backchannel communication present, perceived emotional synchrony was 21% greater than without it, a finding consistent with previous research on text-based emotional contagion.
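As an illustration of balanced random assignment, here is a Python sketch of block randomization over five conditions. It assumes (not stated in the source) that the two simulated factors are fully crossed and combined with a control, giving 1 + 2 × 2 = 5 conditions, and that block randomization rather than simple random assignment is used; the condition labels are hypothetical. Within Labvanced itself, assignment would typically be handled through its built-in randomization and balancing settings instead.

```python
import random
from itertools import product

# Assumption: control + fully crossed 2 x 2 design = 5 conditions (labels hypothetical).
CONDITIONS = ["control"] + [
    f"{identity}-identity/{backchannel}-backchannel"
    for identity, backchannel in product(("high", "low"), ("absent", "present"))
]


def block_randomize(n_participants, conditions, rng):
    """Assign conditions in shuffled blocks, each block containing every condition once.

    Group sizes therefore differ by at most one, whatever n_participants is.
    """
    assignment = []
    while len(assignment) < n_participants:
        block = list(conditions)
        rng.shuffle(block)
        assignment.extend(block)
    return assignment[:n_participants]


groups = block_randomize(23, CONDITIONS, random.Random(42))
print({c: groups.count(c) for c in CONDITIONS})
```

Compared with drawing each participant’s condition independently, shuffled blocks keep group sizes balanced even when recruitment stops early.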

6. Notable Labvanced Features for Music Research

- Connecting external devices like EEG with Labvanced

- No coding required: ideal for music research students (custom code can be added for complex projects)

- Experiment control with actions like ‘if/then’ logic and ‘while’ loops

- Accurate stimulus presentation / can upload audio, images, video

- Account for variation by monitoring the participant’s device, internet connection, and more

- Randomization / balancing to fit research needs, from automatic to complex approaches

- Screen recording

- Multi-user studies

- Webcam-based eye tracking

- Mouse tracking (x,y coordinates)

- Time series data

- Longitudinal studies

- Smartphone app currently in beta (can be used by researchers and participants in longitudinal studies, such as for gauging the effectiveness of interventions like music therapy)

Conclusion

Music research is a fascinating area of psychology where studies focus on understanding phenomena such as music preference, music and emotions, memory, and more. Current directions in music research point to an increase in multi-user studies, in which multiple participants take part in a study together. Innovative technologies like webcam-based eye tracking are also likely to make their way into this field, and online settings will make it easier to implement novel music psychology experiment designs for studying topics such as music notation reading. Research topics of growing importance also include music therapy and the impact of music on diseases like Alzheimer’s disease, where a longitudinal study design will be important to apply.